Collision attacks on hash functions rest on the birthday paradox, so understanding the probability of identical outputs is essential. When many inputs are hashed, the chance that two distinct messages produce the same digest grows roughly with the square of the number of inputs, which lets attackers find duplicates without exhaustive search. This phenomenon underlies most generic methods for targeting hash vulnerabilities.

Various strategies optimize the process of locating matching digests by balancing computational effort and memory usage. These approaches often rely on probabilistic models to predict when overlaps become statistically significant, reducing the required operations compared to brute-force attempts. Implementing such algorithms demands careful calibration to maximize efficiency while managing resource constraints.

Experimental setups reveal that leveraging birthday paradox principles accelerates identification of duplicated hashes far beyond naive expectations. By systematically organizing data and applying iterative comparison schemes, researchers achieve practical results in cryptanalysis scenarios. This systematic pursuit fosters deeper insight into weaknesses inherent in fixed-length output functions and guides development of more resilient constructs.

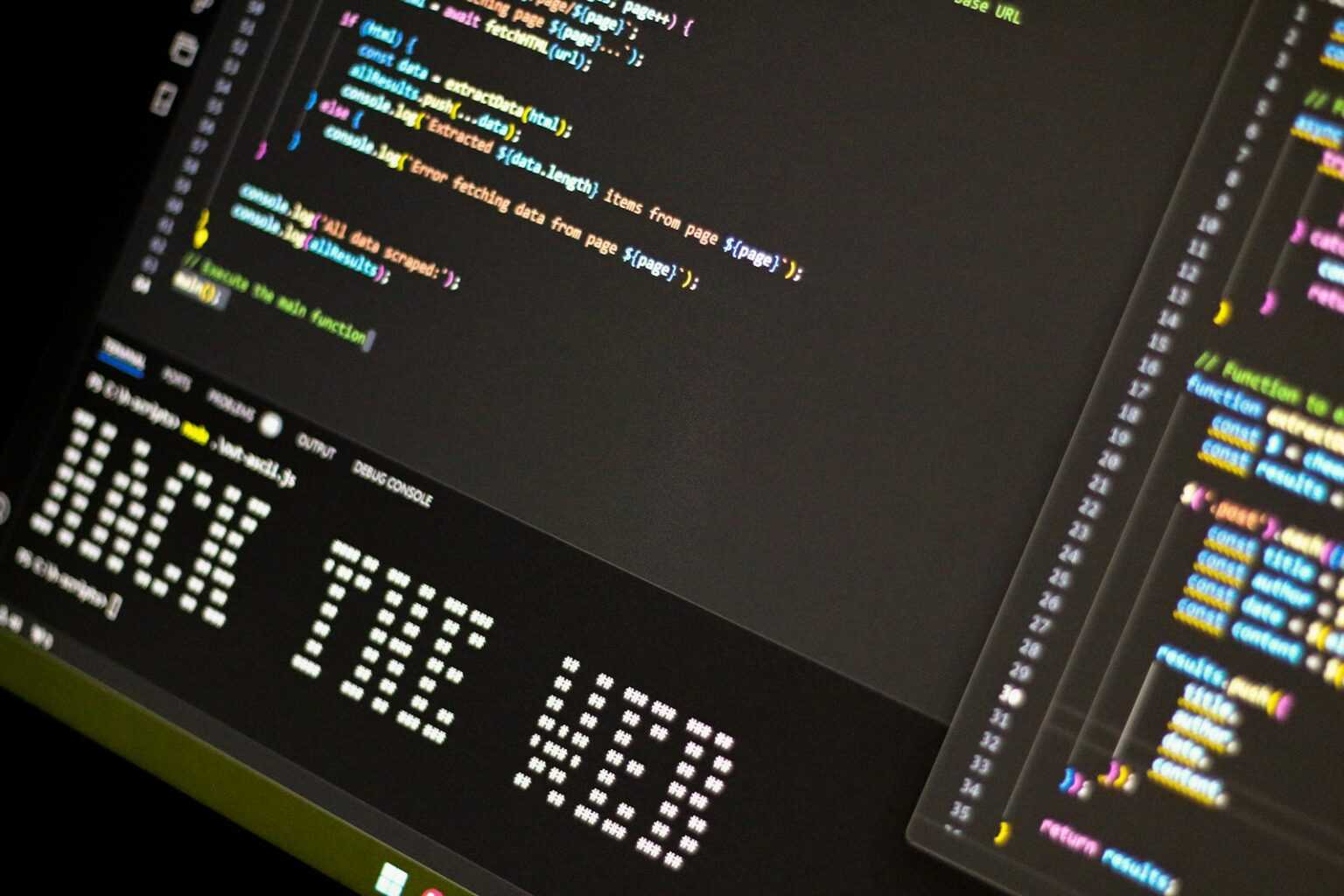

Collision Search Strategies in Hash Functions: Insights from the Genesis Guide

Effective methods for uncovering identical outputs within hash functions rely on understanding the underlying probability distributions and exploiting the so-called paradox that arises in these mappings. The chance of two distinct inputs producing the same hash grows significantly once a certain number of hashes are computed, a phenomenon crucial for assessing the security margin of cryptographic algorithms. This statistical behavior informs how one might systematically approach discovering matching digests without exhaustive search.

Among various strategies, leveraging probabilistic models to estimate the likelihood of repeated outputs enables researchers to optimize resource allocation during experiments. For instance, with a 128-bit hash function, approximately 2^64 attempts are needed before duplicates become probable due to this paradoxical property. Recognizing this threshold guides practical testing and highlights when a function may no longer provide adequate resistance against these exploratory exploits.
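The threshold for a 128-bit digest can be made explicit with the standard birthday approximation. This is a sketch that assumes hash outputs are uniformly distributed over 2^n values; the symbols k (number of hashed inputs) and k_{1/2} (inputs needed for a 50% collision chance) are introduced here for illustration.

```latex
P(\text{collision}) \approx 1 - \exp\!\left(-\frac{k(k-1)}{2 \cdot 2^{n}}\right),
\qquad
k_{1/2} \approx \sqrt{2 \ln 2 \cdot 2^{n}} \approx 1.18 \cdot 2^{n/2}
```

For n = 128 this gives k_{1/2} of roughly 1.18 · 2^64 hash evaluations.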

Mechanisms Behind Identical Output Detection in Cryptographic Hashes

The process begins by generating multiple hashes from different inputs and monitoring their outputs for repetition. This approach capitalizes on the birthday paradox principle, which mathematically demonstrates that collisions emerge surprisingly early relative to the total output space size. Analytical models help predict how many samples are necessary before encountering identical results, facilitating structured experimentation rather than blind brute force.

A compelling example involves MD5, where cryptanalysts exploited structural weaknesses to construct colliding inputs with far fewer than the roughly 2^64 evaluations that the birthday bound predicts for a 128-bit digest. Such discoveries underscore vulnerabilities intrinsic to the function’s design and explain why newer algorithms like SHA-3 offer longer outputs and improved internal structures.

- Random sampling: Generating numerous hashes from randomly selected inputs until repetitions occur.

- Distinguished points method: Tracking special hash values to reduce memory requirements while maintaining detection efficiency.

- Parallelized searches: Distributing computational workload across multiple processors to accelerate discovery times.
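The random sampling strategy from the list above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: it assumes a deliberately weakened toy hash (SHA-256 truncated to `n_bits`), and the names `truncated_hash` and `random_sampling_collision` are hypothetical helpers introduced here.

```python
import hashlib
import os


def truncated_hash(data: bytes, n_bits: int) -> int:
    """Toy hash (assumption): the top n_bits of SHA-256(data) as an integer."""
    digest = int.from_bytes(hashlib.sha256(data).digest(), "big")
    return digest >> (256 - n_bits)


def random_sampling_collision(n_bits: int = 24):
    """Hash random inputs, storing every digest, until two distinct inputs collide."""
    seen = {}  # digest -> input that produced it
    attempts = 0
    while True:
        msg = os.urandom(16)
        attempts += 1
        h = truncated_hash(msg, n_bits)
        other = seen.get(h)
        if other is not None and other != msg:
            return other, msg, attempts
        seen[h] = msg


if __name__ == "__main__":
    a, b, attempts = random_sampling_collision(24)
    print(a.hex(), b.hex(), attempts)
```

With `n_bits=24` the first collision typically appears after a few thousand attempts, in line with the 2^(n/2) estimate, but every digest seen so far has to be kept in memory.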

The interplay between complexity and probability explains why these methods differ substantially in performance and practicality. While brute forcing every possible input is infeasible for strong functions due to astronomical combinations, probabilistic shortcuts exploit inherent statistical properties for more feasible analysis.

The empirical evidence confirms that increasing output length directly impacts resilience by exponentially decreasing the odds of repeated outputs emerging through these search paradigms. Consequently, understanding these probabilistic mechanisms remains central to evaluating cryptographic strength and guiding future design choices within blockchain applications and beyond.

This experimental framework encourages hands-on replication: researchers can simulate hashing trials with varying input sizes, apply distinguished point tracking algorithms, or implement distributed searches to observe firsthand how repetition probabilities evolve with sample size increases. Each trial enhances comprehension of theoretical predictions while also refining practical defensive measures against such explorations targeting system integrity.

Optimizing Hash Function Selection

Selecting a robust hash algorithm requires prioritizing low probability of output repetition within expected input sizes. The paradox known for illustrating this phenomenon demonstrates that even with seemingly large output spaces, the chance of two distinct inputs producing identical hashes grows faster than intuition suggests. This statistical insight guides the minimum bit-length standards in contemporary cryptographic protocols to mitigate vulnerabilities arising from such overlaps.

Evaluating candidate hashing methods involves analyzing their resistance against overlap exploitation scenarios through quantitative metrics. For instance, hash outputs shorter than 256 bits increasingly expose systems to expedited match discovery attempts, due to reduced complexity in probing output domains. By contrast, algorithms offering longer digests exponentially raise computational barriers, enforcing stronger security postures against probabilistic matching events.

Analyzing Probability and Output Size Trade-offs

The mathematical foundation underpinning vulnerability assessments relates directly to combinatorial probabilities inherent in mapping inputs to fixed-size outputs. Doubling the digest length does not merely double the effort needed to find a collision; it squares it, because the generic birthday bound grows from about 2^(n/2) to about 2^n evaluations when an n-bit digest becomes a 2n-bit digest. This nonlinear relationship emphasizes why choosing functions with sufficient digest size is crucial for sustained resilience.

Case studies on legacy hashing algorithms like MD5 and SHA-1 illustrate how short digests (128 and 160 bits), combined with structural weaknesses, allowed refined search methods to find collisions well below the generic birthday bound. These historical precedents underscore the necessity of adopting modern functions such as SHA-256 or SHA-3, which keep the likelihood of such events negligible within feasible operational parameters.

Methodological Approach to Algorithm Evaluation

- Quantify expected input volume and assess corresponding collision likelihood based on digest size.

- Implement empirical testing frameworks emulating exhaustive search patterns to estimate real-world risk levels.

- Analyze cryptanalytic advancements impacting function robustness against sophisticated duplication strategies.
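The first step in the list, estimating collision likelihood from input volume and digest size, can be approximated numerically. The sketch below assumes an ideal hash with uniformly distributed outputs and uses the birthday approximation P ≈ 1 - exp(-k(k-1) / 2^(n+1)); the function name `collision_probability` is illustrative.

```python
import math


def collision_probability(num_inputs: int, digest_bits: int) -> float:
    """Approximate chance of at least one collision among num_inputs hashes.

    Assumes an ideal hash with 2**digest_bits equally likely outputs.
    """
    exponent = -num_inputs * (num_inputs - 1) / 2 ** (digest_bits + 1)
    # expm1 keeps precision when the probability is extremely small.
    return -math.expm1(exponent)


if __name__ == "__main__":
    for bits in (128, 160, 256):
        print(bits, collision_probability(2**64, bits))
```

Comparing the result against an acceptable risk level for the expected input volume gives a rough lower bound on digest size. It says nothing about structural weaknesses in a specific function.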

This structured process aids in delineating practical security margins and informs systematic adjustments aligned with evolving computational capacities and attack vectors targeting hash outputs.

Integrating Computational Constraints into Selection Criteria

The feasibility of exhaustive exploration tactics depends heavily on available processing power and optimization heuristics applied in iterative search procedures. Algorithms presenting complex internal structures coupled with longer output sequences drastically elevate resource demands for successful equivalence discovery exercises. Monitoring ongoing improvements in hardware accelerators highlights the need for periodic reassessment of chosen hashing schemes to ensure persistent defense layers remain intact under shifting technological landscapes.

Experimental benchmarks indicate that algorithms utilizing sponge constructions or modular arithmetic combined with substantial bit-lengths currently offer superior resistance profiles, effectively extending timeframes necessary for overlap exploitation beyond practical limits encountered by adversaries employing probabilistic sampling methods analogous to the paradox scenario.

Memory Trade-offs in Collision Search Methods

Optimizing the balance between memory consumption and computational effort is a fundamental strategy for enhancing efficiency in hash function collision discovery. The classic approach requires storing a significant number of intermediate hash outputs to increase the likelihood of identifying two inputs producing identical digests, exploiting the well-known probabilistic phenomenon where collisions become probable after processing approximately 2^(n/2) inputs for an n-bit hash. However, this direct method demands substantial storage, often impractical for large-scale or resource-constrained environments.

To address these limitations, alternative algorithms employ time-memory trade-offs that reduce storage needs at the cost of increased computation. One prominent example involves distinguished point methods, where only hash outputs matching specific criteria (e.g., leading zeros) are retained, drastically lowering memory usage while maintaining a manageable probability of intersection detection. Another approach employs cycle detection techniques such as Floyd’s or Brent’s algorithms, which track iterative sequences with minimal memory but require repeated recomputations, illustrating a clear inverse relationship between space and processing time in cryptanalytic contexts.
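The cycle detection idea can be illustrated with Floyd's algorithm applied to an iterated toy hash. This is a sketch under stated assumptions: `f` is SHA-256 truncated to `n_bits` (a weakened stand-in, not a real target), and `floyd_collision` is a hypothetical helper name. Only a constant number of values is stored, at the cost of evaluating `f` several times per step.

```python
import hashlib


def f(x: int, n_bits: int) -> int:
    """Toy iteration function (assumption): top n_bits of SHA-256 of x."""
    digest = hashlib.sha256(x.to_bytes(32, "big")).digest()
    return int.from_bytes(digest, "big") >> (256 - n_bits)


def floyd_collision(start: int, n_bits: int = 20):
    """Return (a, b) with a != b and f(a) == f(b), or None if start is on the cycle."""
    # Phase 1: the hare moves two steps per tortoise step until they meet in the cycle.
    tortoise = f(start, n_bits)
    hare = f(f(start, n_bits), n_bits)
    while tortoise != hare:
        tortoise = f(tortoise, n_bits)
        hare = f(f(hare, n_bits), n_bits)

    # Phase 2: restart the tortoise at the beginning; both now move one step at a time.
    tortoise = start
    if tortoise == hare:
        return None  # no tail, so no second preimage on this path; try another start
    while True:
        next_t = f(tortoise, n_bits)
        next_h = f(hare, n_bits)
        if next_t == next_h:
            return tortoise, hare  # two distinct inputs entering the cycle at the same point
        tortoise, hare = next_t, next_h


if __name__ == "__main__":
    # A start value outside the n_bits output range can never lie on the cycle.
    print(floyd_collision(start=2**40, n_bits=20))
```

Brent's variant follows the same pattern with fewer evaluations of `f` on average; it is not shown here.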

Experimental Approaches to Space-Efficient Hash Collisions

In laboratory conditions replicating cryptographic analysis, researchers have demonstrated practical implementations of these trade-offs using reduced-memory algorithms against truncated versions of standard hashing protocols like SHA-1 and MD5. For instance, distinguished point strategies reduced peak memory requirements by over 70% while increasing runtime by approximately 30%, preserving effective collision probability within acceptable thresholds. These outcomes affirm that selective checkpointing within hash chains can serve as a viable compromise when exhaustive table storage is infeasible.

Further investigations highlight that algorithmic parameters such as chain length and selection criteria for stored values critically influence overall success rates and resource allocation. Adjusting these variables enables fine-tuning based on application constraints. For example, embedded systems with limited RAM benefit from aggressive memory minimization despite slower execution, whereas high-performance clusters may favor speed through extensive parallel storage. Such empirical data empowers analysts to tailor cryptanalysis pipelines thoughtfully according to operational priorities.
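The roles of chain length and selection criterion can be seen in a small distinguished-point search. The sketch below is illustrative only: it assumes a toy hash (SHA-256 truncated to `n_bits`), treats values whose low `dp_bits` bits are zero as distinguished, and uses hypothetical helper names (`step`, `walk`, `locate`, `dp_collision`). Only chain endpoints are stored, so memory shrinks by roughly a factor of 2^dp_bits compared with storing every digest.

```python
import hashlib


def step(x: int, n_bits: int) -> int:
    """Toy iteration function (assumption): top n_bits of SHA-256 of x."""
    digest = hashlib.sha256(x.to_bytes(32, "big")).digest()
    return int.from_bytes(digest, "big") >> (256 - n_bits)


def walk(start: int, n_bits: int, dp_bits: int, max_len: int):
    """Iterate from start until a distinguished point; return (point, steps) or None."""
    mask = (1 << dp_bits) - 1
    x = start
    for length in range(1, max_len + 1):
        x = step(x, n_bits)
        if x & mask == 0:
            return x, length
    return None  # chain abandoned (probably stuck in a cycle with no distinguished point)


def locate(start_a, len_a, start_b, len_b, n_bits):
    """Re-walk two chains that end at the same point to find where they merge."""
    a, b = start_a, start_b
    while len_a > len_b:  # align both chains to the same distance from the endpoint
        a, len_a = step(a, n_bits), len_a - 1
    while len_b > len_a:
        b, len_b = step(b, n_bits), len_b - 1
    if a == b:
        return None  # one chain starts inside the other; no collision to report
    while True:
        next_a, next_b = step(a, n_bits), step(b, n_bits)
        if next_a == next_b:
            return a, b
        a, b = next_a, next_b


def dp_collision(n_bits: int = 24, dp_bits: int = 6):
    """Return ((a, b), stored_points) where step(a) == step(b) and a != b."""
    max_len = 20 << dp_bits  # abandon chains far longer than the expected 2**dp_bits
    table = {}  # distinguished point -> (chain start, chain length)
    i = 0
    while True:
        start = (1 << n_bits) + i  # starts outside the output range, all distinct
        i += 1
        result = walk(start, n_bits, dp_bits, max_len)
        if result is None:
            continue
        point, length = result
        if point in table:
            found = locate(*table[point], start, length, n_bits)
            if found is not None:
                return found, len(table)
        else:
            table[point] = (start, length)


if __name__ == "__main__":
    print(dp_collision())
```

Raising `dp_bits` stores fewer points but lengthens each chain and the re-walk in `locate`, which is the trade-off the paragraph above describes.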

Parallelization Methods for Attacks

Maximizing efficiency in algorithms aimed at identifying redundancies within hash functions necessitates leveraging parallel processing capabilities. By distributing computational tasks across multiple processors or cores, the probability of encountering identical output values from distinct inputs increases significantly within a reduced timeframe. Techniques utilizing simultaneous execution on GPU clusters or cloud-based infrastructures exemplify this approach, allowing billions of hash computations per second to be conducted concurrently, thereby accelerating the search for matching outputs.

Implementing these concurrent operations involves dividing the input domain into independent segments processed in parallel, minimizing inter-thread communication overhead. For instance, when analyzing cryptographic hashes such as SHA-256 or Blake2, separate threads can independently generate and evaluate candidate inputs without coordination until potential equivalences surface. This method effectively multiplies throughput while maintaining algorithmic integrity and reducing latency associated with sequential evaluation.
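A minimal sketch of this partitioning is shown below. It assumes a toy hash (SHA-256 truncated to `n_bits`) and counter-based inputs, and the names `hash_segment` and `parallel_collision` are hypothetical. Workers hash disjoint segments without talking to each other; matches surface only when the results are merged. Threads are used to keep the example self-contained; in CPython a process pool or GPU kernel would be needed for a real speedup on CPU-bound hashing.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor


def truncated_hash(data: bytes, n_bits: int) -> int:
    """Toy hash (assumption): the top n_bits of SHA-256(data) as an integer."""
    digest = int.from_bytes(hashlib.sha256(data).digest(), "big")
    return digest >> (256 - n_bits)


def hash_segment(start: int, stop: int, n_bits: int):
    """Hash every counter value in [start, stop) independently of other segments."""
    return [(truncated_hash(i.to_bytes(8, "big"), n_bits), i) for i in range(start, stop)]


def parallel_collision(n_bits: int = 20, total: int = 1 << 13, workers: int = 4):
    """Split [0, total) across workers, then merge results and look for a repeat."""
    chunk = -(-total // workers)  # ceiling division
    bounds = [(w * chunk, min((w + 1) * chunk, total)) for w in range(workers)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        segments = list(pool.map(lambda b: hash_segment(b[0], b[1], n_bits), bounds))

    seen = {}  # digest -> counter value that produced it
    for segment in segments:
        for digest, value in segment:
            if digest in seen:
                return seen[digest], value  # two distinct counters, same digest
            seen[digest] = value
    return None  # no collision within this input budget


if __name__ == "__main__":
    print(parallel_collision())
```

The merge step here still stores every digest. Combining the partitioning with distinguished points is the usual way to keep shared storage small.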

Technical Foundations and Practical Implementations

The mathematical underpinnings stem from probabilistic models describing output collisions in hash spaces; specifically, the likelihood that two distinct inputs yield an identical result grows as more attempts are made. Exploiting this principle through parallelism intensifies simultaneous trials, effectively compressing temporal resources required to detect overlaps. Research demonstrates that scaling from single-core CPUs to multi-GPU arrays can reduce expected runtime by orders of magnitude depending on hardware architecture and memory bandwidth.

Case studies involving distributed systems reveal architectures where nodes exchange partial findings to avoid redundant computations while maintaining synchronization checkpoints. Such distributed frameworks balance workload distribution with network latency considerations, optimizing overall performance. For example, projects targeting MD5 vulnerabilities have utilized thousands of coordinated machines executing partitioned searches that collectively uncover repeated output patterns faster than isolated efforts.

Advanced methodologies incorporate heuristic pruning and caching mechanisms alongside parallel task execution to streamline pathways toward repeated output identification. By storing intermediate results and discarding unpromising branches early in computation graphs, these hybrid strategies conserve processing power while enhancing collision discovery rates. Experimentation shows that combining these techniques with parallel computing yields measurable improvements over brute-force approaches alone.

- Leveraging SIMD instructions enhances data-level parallelism within single processors.

- Utilizing cloud platforms offers elastic scaling for extensive computational campaigns.

- Employing asynchronous task queues reduces idle time between dependent computations.

The integration of these elements fosters a robust environment for exploring vulnerabilities in cryptographic constructs through mass evaluation strategies. Continuous refinement of parallel execution frameworks remains pivotal for advancing analytical capabilities related to hash function assessments and their resilience against exploitation attempts.

Mitigating Risks of Hash Function Vulnerabilities through Statistical Approaches

Reducing the likelihood of duplicated hash outputs requires increasing the output size and employing functions with strong avalanche effects. For instance, moving from a 160-bit to a 256-bit digest raises the generic birthday bound from about 2^80 to about 2^128 hash evaluations, a factor of 2^48 (roughly 14 orders of magnitude) in attacker effort. Additionally, using cryptographic algorithms resistant to shortcut searches ensures that attempts to find matching values demand computational efforts exceeding practical limits.

Integrating multi-hash schemes or randomized salts enhances unpredictability, complicating efforts to identify equivalent digests within manageable timeframes. Experimental setups reveal that combining distinct hashing procedures raises the threshold for successful matching attempts beyond conventional computational capacities, thereby reinforcing system integrity.
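One way such a scheme might look is sketched below. It is an assumption-laden illustration, not a recommended construction: the function name `salted_multi_hash`, the choice of SHA-256 and BLAKE2b, and the salt-prefix layout are all picked here for demonstration. How much extra collision resistance a combination provides depends on the functions involved, and this sketch does not measure it.

```python
import hashlib
import os


def salted_multi_hash(message: bytes, salt: bytes) -> bytes:
    """Concatenate two salted digests from different hash families (illustrative)."""
    sha_part = hashlib.sha256(salt + message).digest()
    blake_part = hashlib.blake2b(salt + message, digest_size=32).digest()
    return sha_part + blake_part  # 64 bytes in total


if __name__ == "__main__":
    salt = os.urandom(16)  # random per-context salt
    print(salted_multi_hash(b"example message", salt).hex())
```

A collision must now hold for both component digests under the same salt, and precomputed collisions for an unsalted function no longer apply directly.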

Strategic Considerations and Future Directions

The statistical phenomenon underpinning replicated digest occurrences imposes strict requirements on design choices for blockchain protocols and digital signatures. As computational power grows, traditional hashing standards risk diminishing security margins due to increased feasibility in seeking identical outputs. Laboratories simulating accelerated search algorithms demonstrate that only proactive enhancement of function complexity and output length can maintain robustness.

- Deploying adaptive hash constructions responsive to emerging quantum threats remains a priority.

- Exploring hybrid architectures that blend classical and post-quantum primitives offers promising avenues for resilience.

- Ongoing research into entropy amplification methods aims at minimizing predictability in hash generation processes.

Encouraging researchers and developers to experimentally evaluate novel hashing paradigms fosters a culture of continuous improvement. By systematically testing new algorithms against probabilistic models of duplicate output emergence, one can validate effectiveness before integration. This empirical approach cultivates deeper understanding of how subtle parameter variations impact overall security posture.