To achieve a reliable beacon that delivers unpredictable outputs, integrating multiple independent sources across a network is imperative. A single origin often suffers from biases or predictability due to environmental or systemic constraints, whereas combining diverse inputs enhances the overall uncertainty pool, making the final value significantly harder to anticipate.

Utilizing joint contributions from several nodes reduces dependence on any one participant’s trustworthiness or hardware characteristics. This approach distributes the task of creating uncertainty, ensuring no single failure or compromise can deterministically influence the outcome. Such synergy enables continuous refresh of randomness with measurable entropy accumulation from each source involved.

Practical implementation involves protocols that aggregate and mix bits collected asynchronously over time, verifying freshness and independence before incorporation. Emphasis on detecting potential correlations among inputs further strengthens robustness. Experimenting with entropy extraction methods and threshold schemes reveals optimal parameters for maximizing unpredictability while maintaining efficiency within the distributed framework.

Distributed randomness: collaborative entropy generation

The creation of an unpredictable beacon within a decentralized network demands meticulous design to ensure the integrity and impartiality of the resulting values. Utilizing multiple independent sources as contributors prevents any single point from influencing the output, enhancing trust in the system’s impartiality. This approach applies cryptographic techniques such as verifiable delay functions (VDFs) or threshold signatures to amalgamate inputs, ensuring that no participant can predict or manipulate the final signal prematurely.

Networks leveraging multi-party protocols coordinate contributions from distinct nodes, each providing input derived from local physical phenomena or computational processes. By integrating these various inputs through secure aggregation methods, the combined outcome achieves elevated unpredictability and resistance against adversarial control. Such mechanisms are critical for applications like consensus protocols, lotteries, and secure key generation where unbiased data is paramount.

Technologies and methodologies in collective value production

Implementations often employ beacon systems that continuously emit fresh values based on aggregated participation by numerous peers. For instance, Algorand’s cryptographic sortition uses a verifiable random function (VRF) seeded from prior rounds, so each node can privately check whether it was selected and later prove that selection to others without revealing it beforehand. Similarly, drand, a widely adopted public randomness beacon, relies on a threshold signature scheme among distributed servers to produce publicly verifiable outputs at fixed intervals.

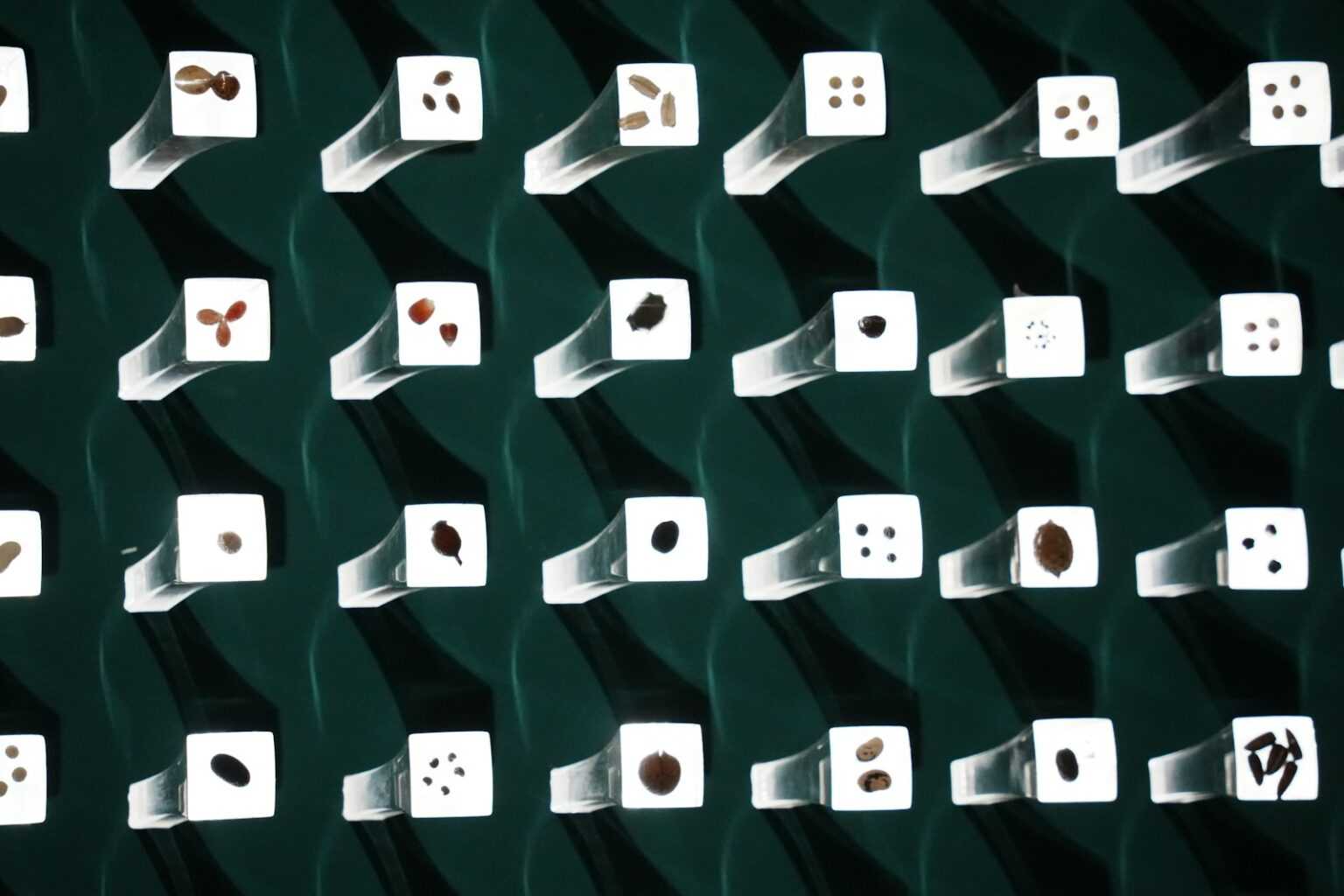

Experimental setups demonstrate how mixing entropy pools derived from hardware noise generators across a broad network enhances statistical quality compared to isolated devices. Researchers have observed that combining physical random number generators with pseudo-random functions mitigates biases inherent in individual devices, improving overall robustness. Stepwise validation includes periodically running statistical test suites (e.g., NIST SP 800-22) on collected samples to detect bias or degradation under varying network conditions; passing such tests rules out gross defects but does not by itself prove unpredictability.

- Step 1: Collect raw signals from heterogeneous sources (thermal noise, clock jitter).

- Step 2: Apply cryptographic extractors to distill uniform bits.

- Step 3: Aggregate processed bits using threshold schemes or secret sharing protocols.

- Step 4: Publish results with proofs enabling external verification.
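The four steps above can be sketched end to end in a few lines. This is a minimal illustration under loud assumptions, not a production design: `os.urandom` stands in for each node's raw noise samples, a SHA-256 hash stands in for a proper cryptographic extractor, and a plain XOR-then-hash stands in for the threshold or secret-sharing aggregation named in Step 3. All function names are hypothetical.

```python
import hashlib
import os
from functools import reduce


def extract(raw: bytes, label: bytes) -> bytes:
    """Step 2 stand-in: condition raw samples into 32 bytes with SHA-256."""
    return hashlib.sha256(label + raw).digest()


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def aggregate(contributions: list) -> bytes:
    """Step 3 stand-in: XOR all contributions, then hash the result."""
    mixed = reduce(xor_bytes, contributions)
    return hashlib.sha256(b"beacon-output" + mixed).digest()


def publish(contributions: list, output: bytes) -> dict:
    """Step 4 stand-in: publish the output with the inputs needed to recompute it."""
    return {
        "contributions": [c.hex() for c in contributions],
        "output": output.hex(),
    }


def verify(record: dict) -> bool:
    """External check: recompute the output from the published contributions."""
    contributions = [bytes.fromhex(c) for c in record["contributions"]]
    return aggregate(contributions).hex() == record["output"]


# Step 1 stand-in: os.urandom plays the role of each node's raw noise samples.
raw_samples = [os.urandom(64) for _ in range(4)]
contributions = [extract(raw, b"node-%d" % i) for i, raw in enumerate(raw_samples)]
record = publish(contributions, aggregate(contributions))
print(record["output"], verify(record))
```

XOR aggregation keeps the result uniform as long as at least one contribution is uniform and independent of the others, but without commitments the last contributor can still pick its input after seeing the rest, which is why real beacons add the cryptographic machinery discussed in the following sections.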

The implications extend beyond blockchain consensus; secure voting systems and privacy-preserving computations benefit significantly by incorporating such collaboratively produced signals as foundational components. In practice, achieving fault tolerance involves selecting robust subsets of participants while maintaining liveness guarantees despite partial network failures or malicious actors attempting prediction attacks.

The progression toward reliable collaboration in unpredictable source integration remains an active research frontier demanding rigorous experimentation across diverse environments. Understanding subtle correlations between contributor inputs and refining extraction mechanisms can reveal new pathways to improve unpredictability levels further. Encouraging hands-on exploration through testnets or simulation frameworks aids practitioners in developing intuition about failure modes and optimization opportunities within this vital domain of digital systems architecture.

Implementing Multi-Party Entropy Sources

The integration of multiple independent inputs into a unified source of unpredictability significantly enhances the robustness of entropy in blockchain networks. Combining values from various participants mitigates risks associated with single-point failures or adversarial manipulation, thereby ensuring that the resulting beacon reflects genuinely unpredictable outcomes. Practical implementations require carefully orchestrated protocols where each node contributes an input that, when aggregated, forms a high-quality seed for cryptographic operations.

Leveraging a distributed approach to creating randomness demands synchronization across network participants to prevent bias and ensure fairness. Techniques such as verifiable secret sharing and threshold cryptography enable partial contributions to be combined securely without revealing individual inputs prematurely. This collective process yields a beacon output that remains unpredictable until all parties have submitted their data, preserving the integrity of subsequent consensus or protocol decisions.
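To make the threshold idea concrete, here is a small sketch of Shamir secret sharing, the building block underneath verifiable secret sharing. It assumes a toy setup with a trusted dealer and omits the verification proofs that make a scheme "verifiable"; the field prime and function names are illustrative choices, not taken from any particular protocol.

```python
import secrets

# 2**127 - 1 is a Mersenne prime; it serves as the field modulus for this toy example.
PRIME = 2**127 - 1


def split_secret(secret: int, threshold: int, num_shares: int) -> list:
    """Return points on a random polynomial of degree threshold-1 whose
    constant term is the secret."""
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def evaluate(x: int) -> int:
        acc = 0
        for c in reversed(coeffs):  # Horner's rule
            acc = (acc * x + c) % PRIME
        return acc

    return [(x, evaluate(x)) for x in range(1, num_shares + 1)]


def recover_secret(shares: list) -> int:
    """Lagrange-interpolate the polynomial at x = 0 from the given shares."""
    total = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        total = (total + yi * num * pow(den, -1, PRIME)) % PRIME
    return total


secret = secrets.randbelow(PRIME)
shares = split_secret(secret, threshold=3, num_shares=5)
print(recover_secret(shares[:3]) == secret)
```

Any three of the five shares recover the secret, while fewer than three reveal nothing about it. A beacon would use this so that a participant's contribution can still be reconstructed by the others if that participant goes silent.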

Technical Methodologies and Protocols

One effective method involves threshold signature schemes, in which nodes independently produce partial signatures on the same message and any subset of at least the threshold size can combine them into a valid group signature. When the scheme produces unique signatures (as BLS does), the group signature is fixed by the message and the group key, so hashing it yields a value that no participant can bias and that stays unpredictable until enough partial signatures exist. Distributed key generation (DKG) protocols let nodes jointly create the group key so that no single party ever holds the full private key, making outputs resistant to manipulation by any coalition smaller than the threshold.

Another approach uses commit-reveal phases: participants first submit hashed commitments to their random shares and later reveal the original inputs. This two-step process prevents a participant from choosing its input after observing others’ contributions. It does not by itself prevent withholding: a participant who sees the other reveals can refuse to open its own commitment, so practical designs add deposits, penalties, or recoverable shares. Ethereum’s RANDAO follows this general pattern, whereas drand takes the threshold-signature route described above and operates a public randomness beacon whose outputs anyone can verify against the group public key.
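A commit-reveal round can be sketched as follows. This is a simplified illustration that assumes every party reveals honestly; the party names, the 32-byte value and salt sizes, and the hash-based combiner are assumptions made for the example.

```python
import hashlib
import hmac
import secrets


def commit(value: bytes, salt: bytes) -> bytes:
    """Commitment = SHA-256(salt || value); both inputs are fixed at 32 bytes."""
    return hashlib.sha256(salt + value).digest()


def verify_reveal(commitment: bytes, value: bytes, salt: bytes) -> bool:
    """Check that a revealed (value, salt) pair opens the earlier commitment."""
    return hmac.compare_digest(commitment, commit(value, salt))


def combine(values: list) -> bytes:
    """Hash all verified values, in a fixed order, into one output."""
    h = hashlib.sha256()
    for v in values:
        h.update(v)
    return h.digest()


# Phase 1: each party picks a secret value and salt, and publishes only the commitment.
parties = {
    name: (secrets.token_bytes(32), secrets.token_bytes(32))
    for name in ("alice", "bob", "carol")
}
commitments = {name: commit(value, salt) for name, (value, salt) in parties.items()}

# Phase 2: parties reveal; only reveals that match their commitment are accepted.
revealed = [
    value
    for name, (value, salt) in sorted(parties.items())
    if verify_reveal(commitments[name], value, salt)
]
beacon_value = combine(revealed)
print(beacon_value.hex())
```

The sketch does not handle a party that commits and then refuses to reveal. Deciding what happens in that case (abort, penalize, or reconstruct the missing value from shares) is where most of the real protocol design effort goes.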

A comparative examination reveals trade-offs between latency, throughput, and security guarantees in different designs. For example, asynchronous protocols tolerate network delays better but may introduce complexities in finalizing beacon outputs promptly. Conversely, synchronous schemes demand strict timing assumptions but often simplify verification steps. Selecting an appropriate construction depends on network topology, expected adversarial capabilities, and application-specific requirements regarding unpredictability assurance.

Experimental setups should explore combinations of these mechanisms tailored for diverse network conditions and threat models. Researchers can simulate adversarial behavior by controlling subsets of contributors that attempt to bias the output or reveal inputs prematurely, thereby quantifying resilience under controlled conditions. Monitoring the output with statistical tests can detect gross defects, although passing such tests does not by itself establish the unpredictability that cryptographic primitives require.

The pursuit of reliable sources combining independent inputs encourages continuous innovation in protocol design and validation techniques. It invites hands-on experimentation with hybrid approaches, such as incorporating time-delay functions alongside multi-party aggregation, to balance speed against security guarantees effectively. Such systematic exploration fosters deeper understanding while progressively refining practical solutions that underpin trustworthy blockchain consensus systems worldwide.

Securing randomness against adversaries

Ensuring unpredictability in network-wide value creation requires protocols that resist manipulation by malicious actors controlling a subset of participants. One effective approach is the implementation of a beacon system where multiple independent nodes contribute partial inputs to produce a final unpredictable output. This method leverages fault tolerance and cryptographic commitments, preventing any single entity from biasing the outcome. For example, threshold cryptography schemes enable a predefined quorum of participants to collectively compute an unbiased result without revealing individual shares prematurely.

Robustness against adversaries can also be strengthened by passing the entropy pool through verifiable delay functions (VDFs) or similar time-bound computations. These functions require a long sequential computation, so a participant cannot learn the final output in time to decide whether to contribute or withhold. The Ethereum beacon chain currently relies on RANDAO, in which each block proposer’s BLS signature over the epoch is mixed into a running value; VDFs have been proposed as a hardening layer on top of it but are not part of the deployed protocol. RANDAO keeps producing values at regular intervals even if some validators act maliciously or go offline, although a proposer who skips a block can still exert limited bias.
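The sequential core of a delay function can be illustrated with repeated modular squaring. The sketch below shows only the delay part: it omits the succinct proof that makes a VDF verifiable, and it uses a toy modulus whose factorization is known, which is exactly what a real deployment must avoid. The second function shows why, since anyone who knows the factors can skip the sequential work. All names and parameters are illustrative.

```python
import hashlib

# Toy modulus: the product of two Mersenne primes. Its factorization is public,
# so it offers no real delay; it only keeps the example self-contained.
P, Q = 2**61 - 1, 2**89 - 1
TOY_MODULUS = P * Q


def seed_to_int(seed: bytes) -> int:
    """Map a seed to a starting element modulo TOY_MODULUS."""
    return int.from_bytes(hashlib.sha256(seed).digest(), "big") % TOY_MODULUS


def sequential_delay(seed: bytes, steps: int) -> int:
    """Square the starting element `steps` times; each step needs the previous one."""
    x = seed_to_int(seed)
    for _ in range(steps):
        x = (x * x) % TOY_MODULUS
    return x


def shortcut_with_factorization(seed: bytes, steps: int) -> int:
    """With the factors known, reduce the exponent 2**steps modulo phi(N)
    and finish with a single modular exponentiation."""
    phi = (P - 1) * (Q - 1)
    exponent = pow(2, steps, phi)
    return pow(seed_to_int(seed), exponent, TOY_MODULUS)


slow = sequential_delay(b"round-1", 10_000)
fast = shortcut_with_factorization(b"round-1", 10_000)
print(slow == fast)
```

In a real construction the group is chosen so that nobody knows such a shortcut, and the evaluator publishes a short proof that lets others check the result far faster than recomputing it.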

Technical safeguards for collaborative unpredictability

One critical safeguard involves designing incentive-compatible protocols that discourage collusion among network participants contributing to the random output. Game-theoretic models analyze potential attack vectors such as grinding attacks, where adversaries attempt to influence random values by selectively withholding inputs or retrying generation steps. Penalties for equivocation and rewards for honest participation raise the cost of such strategies, while systems like Algorand limit grinding structurally by deriving each seed from a VRF whose output is fixed by the proposer’s key and the previous seed. Additionally, publicly auditable proofs linked with each contribution enhance transparency and accountability, enabling external validation of randomness integrity.

The architecture of randomness beacons frequently combines multiple entropy sources derived from independent subsystems (such as hardware randomness modules embedded within nodes alongside protocol-level contributions) to increase overall unpredictability. Experimental deployments demonstrate that hybrid approaches reduce vulnerability since an attacker must simultaneously compromise several distinct components to gain control over outputs. Detailed performance analyses highlight trade-offs between latency, communication overhead, and security guarantees, guiding practical implementations tailored for permissionless blockchain networks.

Integrating distributed entropy in protocols

Reliable sources of unpredictable bits play a fundamental role in securing blockchain systems. To achieve unbiased and tamper-resistant output, implementing a beacon mechanism that aggregates unpredictable inputs from multiple nodes within a network is highly recommended. This approach mitigates single-point failure risks by combining contributions, thus enhancing the unpredictability and impartiality of the final outcome.

Protocols benefit significantly from incorporating collaborative schemes where participants sequentially or concurrently contribute to the signal pool. Such methods demand cryptographic guarantees ensuring each participant’s input remains concealed until commitment phases conclude. Consequently, this prevents adversaries from influencing or predicting subsequent values, preserving the integrity of the shared input.

Architectural considerations for secure signal sources

The design of a beacon system must carefully balance throughput and latency constraints inherent in consensus mechanisms. For example, Algorand’s verifiable random function (VRF)-based beacon provides rapid availability with cryptographic proofs that allow network nodes to independently verify outputs without centralized trust. In contrast, drand employs threshold signatures enabling fault tolerance by requiring only a subset of participants to generate valid randomness collectively.

Experimental evaluation reveals that integrating threshold signature protocols into consensus layers enhances resilience against node corruption while maintaining performance metrics within acceptable bounds. Stepwise implementation involves:

- Defining participant sets with weighted voting rights;

- Generating partial signatures on committed secrets;

- Combining shares to produce globally verifiable output;

- Publishing resulting values as beacon pulses available to all network actors.

This systematic process ensures each pulse serves as an unpredictable seed for leader election, sharding assignments, or smart contract initialization.
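As an illustration of how a pulse can seed leader election, the sketch below maps a beacon output and round number to a stake-weighted choice. The function name, the stake table, and the use of SHA-256 as the mapping are assumptions made for the example rather than the method of any specific chain.

```python
import hashlib
from bisect import bisect_right
from itertools import accumulate


def select_leader(pulse: bytes, round_number: int, stakes: dict) -> str:
    """Pick a participant with probability proportional to its integer stake."""
    names = sorted(stakes)  # fixed order so every node computes the same result
    cumulative = list(accumulate(stakes[name] for name in names))
    digest = hashlib.sha256(pulse + round_number.to_bytes(8, "big")).digest()
    # A 256-bit digest makes the modulo bias negligible for realistic stake totals.
    ticket = int.from_bytes(digest, "big") % cumulative[-1]
    return names[bisect_right(cumulative, ticket)]


stakes = {"node-a": 50, "node-b": 30, "node-c": 20}
pulse = hashlib.sha256(b"example beacon pulse").digest()
leaders = [select_leader(pulse, r, stakes) for r in range(5)]
print(leaders)
```

Because the selection depends only on public values, every node reaches the same answer independently; the security of the election therefore rests entirely on the pulse being unpredictable before it is published.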

Ethereum’s RANDAO illustrates how iteratively mixing inputs from validators yields a randomness source that no single validator chooses outright. It is not fully resistant to grinding, however: the final proposers in an epoch can skip their blocks to withhold a reveal, and the more of the last slots an adversary controls, the more candidate outcomes it can choose between. Addressing these weaknesses includes introducing commit-reveal schemes with penalty enforcement, applying delay functions to the mixed output, and integrating external randomness beacons like NIST’s Randomness Beacon as supplemental entropy, at the cost of trusting the external operator.
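The withholding weakness is easy to see in a toy model of iterative mixing. The sketch below XORs the hash of each reveal into a running mix, in the spirit of RANDAO; arbitrary byte strings stand in for the validators' signed reveals, and `None` models a validator that withholds.

```python
import hashlib


def mix_in(current_mix: bytes, reveal: bytes) -> bytes:
    """XOR the SHA-256 hash of a reveal into the running mix."""
    reveal_hash = hashlib.sha256(reveal).digest()
    return bytes(a ^ b for a, b in zip(current_mix, reveal_hash))


def run_epoch(initial_mix: bytes, reveals: list) -> bytes:
    """Fold all reveals into the mix; a None entry is a withheld reveal."""
    mix = initial_mix
    for reveal in reveals:
        if reveal is not None:
            mix = mix_in(mix, reveal)
    return mix


initial = hashlib.sha256(b"genesis").digest()
honest_reveals = [b"reveal-%d" % i for i in range(4)]
last_reveal = b"adversary-reveal"

# The last contributor sees everything before it and can compute both outcomes.
outcome_if_revealed = run_epoch(initial, honest_reveals + [last_reveal])
outcome_if_withheld = run_epoch(initial, honest_reveals + [None])
adversary_options = {outcome_if_revealed, outcome_if_withheld}
print(len(adversary_options))
```

With one final slot the adversary chooses between two outcomes; controlling k consecutive final slots gives up to 2^k candidates, which is the bias that penalties and delay functions aim to remove.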

The interplay between protocol complexity and security assurances guides system architects toward hybrid models combining internal multi-party unpredictability with external trusted beacons. This layered approach offers experimental pathways wherein developers can iteratively test resilience against adversarial strategies while measuring latency overheads and throughput impacts within live testnets.

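A practical investigation might involve deploying a prototype beacon leveraging Schnorr multisignatures across geographically dispersed nodes simulating real-world network delays and adversarial behaviors. Because Schnorr signatures depend on a signer-chosen nonce and are therefore not unique per message, such a prototype should fix or commit to nonces in advance, or use a unique-signature scheme such as BLS, so that signers cannot grind outputs. Observing the statistical distribution uniformity and resistance to bias during repeated trials will yield insights into protocol robustness and parameter tuning strategies necessary for production readiness.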

Measuring Randomness Quality Collaboratively

To evaluate the unpredictability of a randomness source within a network, one must apply rigorous statistical testing combined with protocol-specific metrics. Common approaches include NIST SP 800-22 test suites and Dieharder tests, which assess uniformity, independence, and entropy levels. However, in a system where multiple participants contribute to the output, it becomes critical to analyze both individual input quality and the aggregation method’s resistance to bias or manipulation.
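As one concrete instance of such testing, the frequency (monobit) test from NIST SP 800-22 checks whether ones and zeros are roughly balanced. The sketch below implements that single test with the standard library; the function name is an illustrative choice, and the 0.01 cutoff is the significance level the suite conventionally uses.

```python
import math
import os


def monobit_p_value(data: bytes) -> float:
    """Frequency (monobit) test: p-value for the hypothesis that the bits
    are balanced. Values below 0.01 are conventionally treated as a failure."""
    n = len(data) * 8
    ones = sum(bin(byte).count("1") for byte in data)
    s_n = 2 * ones - n  # +1 for every one bit, -1 for every zero bit
    s_obs = abs(s_n) / math.sqrt(n)
    return math.erfc(s_obs / math.sqrt(2))


print(monobit_p_value(os.urandom(4096)))   # varies from run to run
print(monobit_p_value(b"\x00" * 1000))     # all zeros: effectively 0, fails
print(monobit_p_value(b"\xaa" * 1000))     # 10101010 repeated: passes with 1.0
```

The last line shows why a single test is never enough: a perfectly predictable alternating pattern is perfectly balanced and therefore passes. Full suites combine many tests, and even then they only detect statistical defects, not an adversary who knows the generating process.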

Beacons that aggregate inputs from diverse nodes serve as practical examples of collective uncertainty creation. Their outputs should exhibit minimal correlation with any single participant’s data stream, ensuring that no isolated entity can predict or influence the final value. Monitoring such systems requires continuous collection of intermediate values and verification against known randomness benchmarks to detect anomalies or degradation over time.

Methodologies for Assessing Joint Randomness

The process typically begins by modeling each contributing element as an independent entropy source with measurable min-entropy rates. Combining these through cryptographic primitives, such as verifiable random functions (VRFs) or threshold signatures, produces an aggregate beacon output. Experimentally, researchers measure unpredictability by simulating adversarial scenarios where subsets of participants attempt to skew results. The resilience is quantified by metrics like bias margin reduction and collision probability under partial corruption.
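Two of the quantities mentioned here, min-entropy and collision probability, have simple plug-in estimates over observed byte frequencies. The sketch below is a naive illustration that assumes independent, identically distributed samples; standards such as NIST SP 800-90B describe more conservative estimators with confidence bounds. Function names are hypothetical.

```python
import math
from collections import Counter


def symbol_probabilities(samples: bytes) -> list:
    """Empirical probability of each byte value that occurs in the samples."""
    n = len(samples)
    return [count / n for count in Counter(samples).values()]


def min_entropy(samples: bytes) -> float:
    """Plug-in min-entropy in bits per byte: -log2 of the most likely symbol."""
    return -math.log2(max(symbol_probabilities(samples)))


def collision_probability(samples: bytes) -> float:
    """Probability that two independent draws from the empirical
    distribution produce the same byte."""
    return sum(p * p for p in symbol_probabilities(samples))


uniform = bytes(range(256)) * 4
skewed = b"\x00" * 3 + b"\x01"
print(min_entropy(uniform), collision_probability(uniform))
print(min_entropy(skewed), collision_probability(skewed))
```

Min-entropy is the conservative measure for security purposes because it is driven by the single most likely outcome, which is the one an attacker would guess first.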

For instance, drand employs threshold BLS signatures, so a group of nodes jointly produces each output only once a threshold of them has contributed partial signatures. As long as fewer than the threshold number of nodes collude, the output cannot be predicted or biased, because the final signature is uniquely determined by the round message and the group key. Repeated rounds of output sampling, combined with statistical analyses such as Shannon entropy estimates, can then check that the published values show no detectable bias.

Implementing real-time monitoring frameworks within blockchain networks allows ongoing assessment of the beacon’s quality during operation. These tools track deviations in expected randomness distribution and flag irregular patterns potentially caused by network delays or coordinated attacks. By integrating cryptanalytic techniques alongside probabilistic models, operators gain actionable insight into maintaining robustness in collaborative uncertainty production systems.

Troubleshooting Failures in Collaborative Entropy Sources

Ensuring the integrity of unpredictable data streams within a decentralized network requires rigorous validation of each individual input and its integration. Faults often arise from synchronization issues, biased inputs, or insufficiently diverse contributors, all of which degrade the quality of the shared beacon used for randomness extraction. Immediate steps include implementing cross-node consistency checks and employing cryptographic commitments to prevent premature disclosure of intermediate values.

Experimentation with layered fallback mechanisms provides resilience when one node fails or introduces predictable patterns. For example, hybrid schemes combining hardware-based noise sources with protocol-level mixing can restore unpredictability without sacrificing scalability. Additionally, proactive anomaly detection algorithms analyzing entropy contribution distributions help isolate malfunctioning nodes before they impact global outcomes.
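One simple form of such anomaly detection compares each node's estimated entropy against the group. The sketch below flags nodes whose Shannon entropy per byte falls well below the median, using the median absolute deviation as a robust spread measure. The node names, the factor `k`, and the `min_gap` floor are illustrative assumptions that would need tuning against real data.

```python
import math
import statistics
from collections import Counter


def shannon_entropy(samples: bytes) -> float:
    """Plug-in Shannon entropy of a byte string, in bits per byte."""
    n = len(samples)
    return -sum((c / n) * math.log2(c / n) for c in Counter(samples).values())


def flag_low_entropy_nodes(node_samples: dict, k: float = 3.0, min_gap: float = 0.5) -> list:
    """Return nodes whose entropy is below median - max(k * scaled MAD, min_gap)."""
    scores = {name: shannon_entropy(s) for name, s in node_samples.items()}
    median = statistics.median(scores.values())
    mad = statistics.median(abs(score - median) for score in scores.values())
    # 1.4826 rescales the MAD to match a standard deviation for normal data;
    # min_gap keeps the threshold meaningful when most nodes score identically.
    threshold = median - max(k * 1.4826 * mad, min_gap)
    return sorted(name for name, score in scores.items() if score < threshold)


samples = {
    "node-a": bytes(range(256)),
    "node-b": bytes(reversed(range(256))),
    "node-c": bytes(range(256)) * 2,
    "node-d": b"\x00" * 256,  # a stuck sensor emitting a constant value
}
print(flag_low_entropy_nodes(samples))
```

A flagged node can be excluded from the next round or routed to a fallback source. An entropy estimate of this kind only catches crude failures such as stuck or heavily biased devices, so it complements rather than replaces the cryptographic protections.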

Broader Implications and Future Directions

- Robustness through diversity: Incorporating geographically and technically heterogeneous sources enhances the collective unpredictability, minimizing correlated failures across the system.

- Adaptive beacon protocols: Designing dynamic coordination frameworks that adjust participation thresholds based on real-time health metrics strengthens reliability against adversarial manipulation.

- Transparency via audit trails: Recording verifiable logs of each contributor’s inputs allows for forensic analysis post-failure, informing iterative improvements grounded in empirical data.

- Integration with emerging primitives: Exploring quantum-resistant cryptographic techniques promises to future-proof these collaborative mechanisms as adversaries evolve.

The path forward lies in experimental deployments that measure statistical properties under varying network conditions while continuously refining consensus on source trustworthiness. By fostering methodical inquiry into failure modes and recovery strategies, we can elevate distributed random beacons from theoretical constructs to resilient infrastructure components underpinning secure blockchain protocols worldwide.