Utilize membership functions to represent linguistic variables with gradual transitions instead of binary distinctions. This approach allows models to handle uncertainty by quantifying the degree to which an element belongs to a set, enabling nuanced interpretation within computational frameworks.

Implementing inference mechanisms based on these graded memberships facilitates decision-making processes that mimic human-like judgment under ambiguous conditions. By applying operators designed for partial truth values, such methodologies extend beyond rigid true/false paradigms, offering flexibility in evaluating complex scenarios.

Designing control and classification architectures around these principles requires careful selection of membership definitions and aggregation rules. Experimentation with different function shapes–such as triangular, trapezoidal, or Gaussian–can optimize system responsiveness and accuracy depending on the application’s nature.
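
As a minimal sketch of the three shapes named above, the functions below each return a membership degree in [0, 1]. The function names, the parameter names, and the sample breakpoints are illustrative assumptions, not values prescribed by any particular system.

```python
import math

def triangular(x, a, b, c):
    """Triangle with feet at a and c and peak at b (assumes a < b < c)."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)

def trapezoidal(x, a, b, c, d):
    """Trapezoid with feet at a and d and a plateau on [b, c] (assumes a < b <= c < d)."""
    if x <= a or x >= d:
        return 0.0
    if x < b:
        return (x - a) / (b - a)
    if x <= c:
        return 1.0
    return (d - x) / (d - c)

def gaussian(x, mean, sigma):
    """Bell curve centred on mean; never reaches exactly zero."""
    return math.exp(-0.5 * ((x - mean) / sigma) ** 2)

# Evaluate one normalized input against each shape (breakpoints are made up).
x = 0.6
print("triangular :", triangular(x, 0.2, 0.5, 0.8))
print("trapezoidal:", trapezoidal(x, 0.2, 0.4, 0.7, 0.9))
print("gaussian   :", gaussian(x, 0.5, 0.15))
```

Piecewise-linear shapes are cheap to evaluate and easy to explain, while the Gaussian gives smooth transitions at the cost of never dropping to exactly zero.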

Integrating variable fuzziness into formal models enhances interpretability by linking numeric inputs with linguistic descriptions, bridging quantitative data and qualitative reasoning. This fusion supports adaptive algorithms capable of managing imprecise information common in real-world environments.

Fuzzy Reasoning in Blockchain Science: Harnessing Linguistic Variables for Enhanced Decision-Making

Implementing systems that mimic human-like judgment requires methodologies capable of handling imprecise and uncertain information. The utilization of approximate inferential frameworks enables the modeling of linguistic variables such as “high,” “medium,” or “low,” which are essential for interpreting qualitative data within blockchain environments. These constructs facilitate nuanced evaluations where binary true/false assessments fall short, particularly in scenarios involving transaction risk, consensus reliability, or smart contract validation.

Central to this approach is the deployment of membership functions that map input values onto degrees of truth, allowing for gradations rather than crisp categorizations. By defining overlapping sets with smooth transitions, these functions support gradual decision boundaries critical for adaptive protocols. For example, assessing network congestion levels through variable thresholds enhances throughput control mechanisms by adjusting parameters dynamically based on real-time conditions.

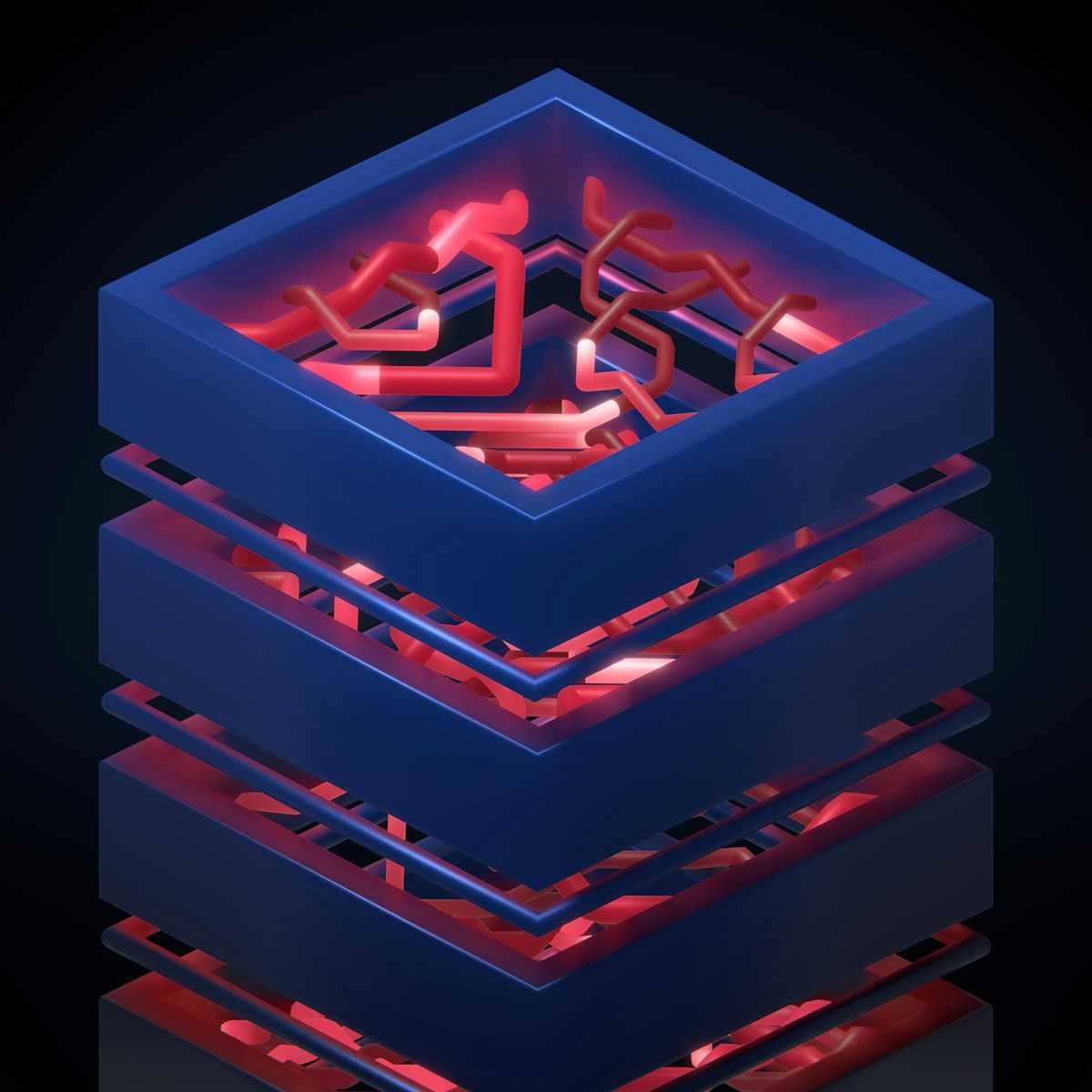

Integration of Approximate Inference Models into Blockchain Architectures

Incorporating approximate inferential methods within distributed ledger technologies offers a robust alternative to rigid rule-based algorithms. Such models excel in environments where data uncertainty emerges from node heterogeneity or incomplete information propagation. The application of linguistic descriptors to system states enriches consensus algorithms by enabling weighted voting schemes that consider degrees of trustworthiness instead of absolute endorsements.

Experimentation with adaptive function tuning demonstrates improved fault tolerance and resilience against adversarial attacks. For instance, dynamic adjustment of validator credibility scores using soft evaluation metrics allows blockchains to mitigate risks associated with Byzantine behaviors more effectively than traditional binary logic systems. Controlled laboratory setups employing simulated attack vectors corroborate significant gains in maintaining ledger integrity under stress conditions.

- Linguistic variable representation: Facilitates interpretation of ambiguous inputs such as transaction priority or miner reputation.

- Membership functions: Provide quantification of partial truths necessary for graded decision-making processes.

- Inference engines: Enable combination and synthesis of multiple fuzzy rules to derive actionable conclusions.
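
The first two components in the list above can be sketched as a small data structure: a linguistic variable that owns named terms, each backed by a membership function. The class name `LinguisticVariable`, the helper names, and the reputation breakpoints are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict

Membership = Callable[[float], float]

def ramp_down(lo: float, hi: float) -> Membership:
    """Degree is 1 at or below lo and falls linearly to 0 at hi."""
    return lambda x: max(0.0, min(1.0, (hi - x) / (hi - lo)))

def ramp_up(lo: float, hi: float) -> Membership:
    """Degree is 0 at or below lo and rises linearly to 1 at hi."""
    return lambda x: max(0.0, min(1.0, (x - lo) / (hi - lo)))

def triangle(a: float, b: float, c: float) -> Membership:
    """Degree peaks at b and is 0 outside (a, c)."""
    return lambda x: max(0.0, min((x - a) / (b - a), (c - x) / (c - b)))

@dataclass
class LinguisticVariable:
    name: str
    terms: Dict[str, Membership]

    def fuzzify(self, x: float) -> Dict[str, float]:
        """Map a crisp value to a degree of membership for every term."""
        return {term: mf(x) for term, mf in self.terms.items()}

# Hypothetical reputation score normalized to [0, 1].
reputation = LinguisticVariable(
    name="miner_reputation",
    terms={
        "low": ramp_down(0.2, 0.5),
        "medium": triangle(0.2, 0.5, 0.8),
        "high": ramp_up(0.5, 0.8),
    },
)

degrees = reputation.fuzzify(0.65)
print(degrees)  # the score is partly "medium" and partly "high"
```

Because the terms overlap, a single score can belong to two neighbouring terms at once, which is what later rules operate on.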

The formulation and calibration of these components require iterative experimentation with diverse datasets reflecting typical blockchain operational states. Researchers can employ stepwise methodologies starting from initial hypothesis formulation about system behavior under uncertainty, followed by designing membership functions tailored to specific use cases. Subsequent testing phases involve measuring output consistency against expected outcomes under varying network loads and attack simulations.

This experimental framework aligns well with ongoing advances in blockchain scalability solutions where adaptability plays a pivotal role. By refining approximate reasoning capabilities through systematic trials, researchers enable smarter resource allocation and enhanced security measures tailored to real-world operational complexities. Encouraging exploration into hybrid systems combining probabilistic models with soft evaluation criteria may further elevate analytical precision in decentralized networks.

Implementing Fuzzy Logic In Blockchain

Integrating approximate reasoning frameworks within blockchain architecture enhances decision-making processes where binary outcomes fall short. By employing linguistic variables and membership functions, blockchain nodes can evaluate uncertain or imprecise data, such as transaction validity under varying network conditions or risk levels in smart contract execution. This methodology refines consensus algorithms by accommodating gradations of truth rather than rigid true/false evaluations.

One practical application involves adaptive trust scoring in decentralized identity verification. Here, a membership function assigns degrees of confidence based on behavioral patterns and historical interactions recorded on-chain. The system interprets these scores through a set of inference rules, enabling nuanced access control that surpasses traditional threshold-based mechanisms. Such soft classification models improve resilience against Sybil attacks and identity spoofing.
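
To make the idea of rule-driven access control concrete, here is a minimal sketch under stated assumptions: the two inputs (`account_age_days` and a `consistency` score in [0, 1]), the breakpoints, and the three access tiers are all hypothetical. Rules use min for AND, max for OR, and 1 - x for NOT, and the tier with the strongest rule wins.

```python
def ramp_up(x, lo, hi):
    """0 at or below lo, 1 at or above hi, linear in between."""
    return max(0.0, min(1.0, (x - lo) / (hi - lo)))

def access_decision(account_age_days, consistency):
    # Fuzzify the two hypothetical on-chain signals.
    established = ramp_up(account_age_days, 30, 365)
    consistent = ramp_up(consistency, 0.4, 0.9)

    strengths = {
        # IF established AND consistent THEN full access
        "full": min(established, consistent),
        # IF exactly one of the two signals is strong THEN limited access
        "limited": max(
            min(consistent, 1.0 - established),
            min(established, 1.0 - consistent),
        ),
        # IF NOT established AND NOT consistent THEN deny
        "deny": min(1.0 - established, 1.0 - consistent),
    }
    tier = max(strengths, key=strengths.get)
    return tier, strengths

for age, score in [(300, 0.85), (40, 0.9), (10, 0.3)]:
    tier, strengths = access_decision(age, score)
    print(age, score, "->", tier, strengths)
```

A new identity with consistent behaviour lands in the middle tier rather than being forced into accept or reject, which is the nuance a single threshold cannot express.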

Technical Foundations and Experimental Insights

At the core, these methodologies rely on defining a domain-specific vocabulary expressed through linguistic terms–such as “high,” “medium,” and “low” risk–and mapping them onto continuous scales via membership functions. This approach transforms qualitative assessments into quantifiable inputs for automated decision modules embedded within blockchain protocols. Implementing such systems requires careful calibration of membership curves to reflect realistic operational parameters observed in experimental network simulations.

An instructive case study examined transaction fee optimization in Ethereum-like environments using this framework. By modeling congestion levels with fuzzy sets and applying rule-based approximations, the protocol dynamically adjusted gas prices to balance throughput and cost-effectiveness. Results demonstrated measurable improvements in transaction inclusion rates during peak demand periods without compromising decentralization principles.
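
The following sketch illustrates the general technique rather than the study's actual method, which the text does not specify. It assumes a zero-order Sugeno-style scheme: congestion is fuzzified from a hypothetical block `utilization` fraction, each term carries a made-up price multiplier, and the suggested price is the membership-weighted average of those multipliers.

```python
def congestion_degrees(utilization):
    """Fuzzify block utilization (0..1) into three overlapping terms."""
    low = max(0.0, min(1.0, (0.5 - utilization) / 0.3))
    medium = max(0.0, min((utilization - 0.2) / 0.3, (0.8 - utilization) / 0.3))
    high = max(0.0, min(1.0, (utilization - 0.5) / 0.3))
    return {"low": low, "medium": medium, "high": high}

# Hypothetical rule consequents: one constant multiplier per term.
MULTIPLIER = {"low": 0.9, "medium": 1.0, "high": 1.25}

def suggested_gas_price(base_price_gwei, utilization):
    """Weighted average of rule outputs, weighted by firing strength."""
    degrees = congestion_degrees(utilization)
    total = sum(degrees.values())  # these terms always sum to 1 on [0, 1]
    weighted = sum(degrees[t] * MULTIPLIER[t] for t in degrees)
    return base_price_gwei * weighted / total

for u in (0.1, 0.5, 0.65, 0.9):
    print(f"utilization={u:.2f} -> {suggested_gas_price(20.0, u):.2f} gwei")
```

The price moves continuously as utilization rises, so there is no jump at a hard congestion threshold.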

Further experimentation explored consensus mechanism enhancements through graded voting schemes informed by approximate evaluation of validator reliability. Instead of strict majority rule, validators contributed weighted opinions shaped by their historical performance encoded as membership values. This paradigm yielded increased fault tolerance and faster convergence times under adversarial conditions simulated across multiple testnets.

- Define linguistic variables tailored to specific blockchain use cases

- Design membership functions reflecting empirical data distributions

- Create inference engines executing rule-based approximations for transaction validation

- Validate system behavior through iterative testing on live or simulated networks

The interplay between continuous valuation metrics and discrete ledger states invites further research into hybrid architectures blending symbolic reasoning with probabilistic models. Such explorations promise richer interpretability alongside robust performance, charting new directions for intelligent blockchain infrastructures capable of self-adaptive governance under uncertainty.

Designing Fuzzy Decision Models

Begin by defining membership functions that translate continuous input variables into graded degrees of belonging within predefined linguistic categories. For instance, in cryptocurrency risk assessment, volatility can be modeled as a variable with membership functions such as “low,” “medium,” and “high,” each represented by trapezoidal or triangular shapes to capture uncertainty. These functions serve as foundational tools enabling nuanced interpretation beyond binary thresholds, facilitating the formulation of rules that mimic human heuristic judgment.

Next, construct rule bases that combine these linguistic terms through if-then statements reflecting expert knowledge or empirical data patterns. For example, an investment-suitability rule might combine inputs such as market sentiment and transaction volume through conjunction (“AND”) and disjunction (“OR”) operators embedded in the inference mechanism. This structure supports graded output conclusions derived from overlapping memberships, thus modeling real-world ambiguity without rigid boundaries.
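
A rule base of this kind can be sketched as follows, assuming the common choice of min for AND and max for OR. The input degrees, the term names, and the three rules are hypothetical placeholders for whatever expert knowledge a real system would encode.

```python
def fuzzy_and(*degrees):
    return min(degrees)

def fuzzy_or(*degrees):
    return max(degrees)

# Inputs that have already been fuzzified (illustrative degrees).
sentiment = {"negative": 0.1, "neutral": 0.6, "positive": 0.3}
volume = {"low": 0.2, "high": 0.8}

# Each rule pairs an output term with the firing strength of its antecedent.
rules = [
    # IF sentiment is positive AND volume is high THEN suitable
    ("suitable", fuzzy_and(sentiment["positive"], volume["high"])),
    # IF sentiment is neutral AND volume is high THEN uncertain
    ("uncertain", fuzzy_and(sentiment["neutral"], volume["high"])),
    # IF sentiment is negative OR volume is low THEN unsuitable
    ("unsuitable", fuzzy_or(sentiment["negative"], volume["low"])),
]

# Several rules may share an output term; keep the strongest one.
suitability = {}
for term, strength in rules:
    suitability[term] = max(suitability.get(term, 0.0), strength)

print(suitability)
```

The result is still fuzzy: every output term carries a degree, and a later step decides how to turn that into a single action.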

Implementing Gradual Inference and Aggregation Techniques

The process continues with the inference engine applying compositional operators to aggregate antecedent conditions and derive consequent fuzzy outputs. Methods such as Mamdani or Sugeno inference provide frameworks for this synthesis: Mamdani inference typically uses min for implication and max for aggregation, which keeps the output sets interpretable, while Sugeno inference computes a weighted average of rule consequents, which suits optimization problems within blockchain governance models. For Mamdani outputs, a defuzzification strategy such as the centroid or bisector method translates the fuzzy result into an actionable crisp decision, which is critical when automating smart contract triggers.
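
As a rough illustration of Mamdani-style inference with centroid defuzzification, the sketch below clips each output set at its rule's firing strength, aggregates the clipped sets with max, and takes the centroid over a discretized output range. The 0 to 100 "risk score" universe, the output sets, and the firing strengths are assumptions made for the example.

```python
def tri(x, a, b, c):
    """Triangular membership with peak at b and feet at a and c."""
    return max(0.0, min((x - a) / (b - a), (c - x) / (c - b)))

# Hypothetical output variable: a risk score on [0, 100].
OUTPUT_SETS = {
    "low": lambda x: tri(x, -50, 0, 50),
    "medium": lambda x: tri(x, 0, 50, 100),
    "high": lambda x: tri(x, 50, 100, 150),
}
XS = range(0, 101)  # discretized universe of discourse

def mamdani_centroid(strengths, output_sets, xs):
    """Clip (min), aggregate (max), then defuzzify by discrete centroid."""
    num = den = 0.0
    for x in xs:
        mu = max(
            min(strengths.get(term, 0.0), mf(x))
            for term, mf in output_sets.items()
        )
        num += x * mu
        den += mu
    if den == 0.0:
        raise ValueError("no rule fired; crisp output is undefined")
    return num / den

# Firing strengths as they might come out of a rule base (illustrative).
score = mamdani_centroid({"low": 0.2, "medium": 0.7, "high": 0.1}, OUTPUT_SETS, XS)
print(f"crisp risk score: {score:.1f}")
```

A smart contract trigger would compare the crisp score against its own policy, so the fuzzy machinery stays upstream of the final on/off action.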

Experimental validation involves iterative tuning of membership parameters and rule weights guided by performance metrics like accuracy in classification or prediction error rates. Case studies in decentralized finance demonstrate how adaptive adjustment improves model responsiveness to volatile market variables. Integrating feedback loops enhances robustness by continuously refining linguistic partitions aligned with emerging data trends, thereby evolving decision frameworks capable of addressing complex uncertainties inherent in distributed ledger environments.
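
One simple way to tune membership parameters is a grid search that minimizes prediction error against labelled samples. The sketch below assumes a single "high volatility" ramp with two breakpoints and a tiny synthetic data set; both are invented for illustration and stand in for real market data and a real error metric.

```python
def high_volatility(x, lo, hi):
    """Degree of "high": 0 at or below lo, 1 at or above hi."""
    return max(0.0, min(1.0, (x - lo) / (hi - lo)))

# Synthetic (volatility, expert-assigned degree) pairs, for illustration only.
samples = [(0.1, 0.0), (0.3, 0.0), (0.5, 0.5), (0.6, 0.75), (0.7, 1.0), (0.9, 1.0)]

def mse(lo, hi):
    """Mean squared error between model degrees and labelled degrees."""
    return sum((high_volatility(x, lo, hi) - y) ** 2 for x, y in samples) / len(samples)

# Candidate breakpoints on a 0.1 grid with lo < hi.
candidates = [
    (lo / 10, hi / 10)
    for lo in range(0, 10)
    for hi in range(1, 11)
    if lo < hi
]

best = min(candidates, key=lambda p: mse(*p))
print("best breakpoints:", best, "error:", mse(*best))
```

In a feedback loop the same search would be rerun as new labelled data arrives, so the linguistic partitions track the data rather than staying fixed.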

Handling Uncertainty In Smart Contracts

Incorporating graded membership functions into smart contracts enables handling ambiguous input variables that traditional binary conditions fail to address. By assigning degrees of belonging rather than strict true/false values, contract clauses can accommodate partial truths, which is particularly useful in scenarios such as credit scoring or insurance claim assessments where data may be incomplete or imprecise.

Utilizing linguistic variables within contract parameters transforms qualitative terms like “high risk” or “moderate delay” into quantifiable ranges, allowing automated decision-making frameworks to process nuanced information. This approach reduces rigid thresholds and introduces flexibility in enforcement criteria, improving fairness and adaptability in decentralized applications.

Implementing Gradual Evaluation Methods in Decentralized Protocols

Decentralized platforms benefit from integrating multi-valued evaluation techniques that mimic human-like judgment under uncertainty. For example, a payment release condition might consider the degree of fulfillment of service level agreements by measuring performance metrics against fuzzy sets rather than crisp cutoffs. This method supports more granular control and mitigates disputes arising from borderline cases.
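
A payment release of this kind might be prototyped off-chain as below. The two metrics (`uptime_pct` and `p95_latency_ms`), their breakpoints, and the rule that the released fraction equals the weaker of the two fulfilment degrees are assumptions for the sketch. An on-chain version would also need fixed-point arithmetic, since Solidity has no native floating-point type.

```python
def ramp_up(x, lo, hi):
    """0 at or below lo, 1 at or above hi."""
    return max(0.0, min(1.0, (x - lo) / (hi - lo)))

def ramp_down(x, lo, hi):
    """1 at or below lo, 0 at or above hi."""
    return max(0.0, min(1.0, (hi - x) / (hi - lo)))

def sla_fulfilment(uptime_pct, p95_latency_ms):
    """Degree to which the SLA is met: both conditions must hold (AND = min)."""
    uptime_ok = ramp_up(uptime_pct, 99.0, 99.9)
    latency_ok = ramp_down(p95_latency_ms, 200.0, 500.0)
    return min(uptime_ok, latency_ok)

def payment_release(amount, uptime_pct, p95_latency_ms):
    """Release a share of the payment proportional to SLA fulfilment."""
    return round(amount * sla_fulfilment(uptime_pct, p95_latency_ms), 2)

print(payment_release(1000, 99.95, 150))  # both metrics fully met
print(payment_release(1000, 99.95, 350))  # latency only partly acceptable
print(payment_release(1000, 98.0, 150))   # uptime clearly below the SLA
```

A provider that narrowly misses one target receives a reduced payment instead of nothing, which is how the graded approach softens borderline disputes.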

An experimental setup involves defining membership functions for key performance indicators (KPIs) relevant to the contract’s purpose. Developers can then construct rule bases using these functions combined with inference engines that compute output confidence levels. Testing this model on blockchain testnets reveals how gradual truth assignments influence contract outcomes over multiple iterations.

The application of soft computing principles extends to risk assessment modules embedded within smart contracts. By employing graded reasoning mechanisms that evaluate various risk factors (such as market volatility, transaction latency, or counterparty reliability), contracts can autonomously adjust obligations or trigger alerts when uncertainty surpasses predefined thresholds. Such dynamic responses enhance resilience without requiring manual intervention.
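
The sketch below shows one possible shape for such a module. The factor names, their breakpoints, the use of max to combine factors, and the two action thresholds are all hypothetical; a production system would calibrate each of them against observed data.

```python
def ramp_up(x, lo, hi):
    """0 at or below lo, 1 at or above hi."""
    return max(0.0, min(1.0, (x - lo) / (hi - lo)))

# Hypothetical factors with (lo, hi) breakpoints for the term "high risk".
RISK_FACTORS = {
    "volatility": (0.02, 0.10),
    "latency_s": (15.0, 120.0),
    "counterparty_default_rate": (0.01, 0.05),
}

def risk_degrees(observed):
    """Degree of "high risk" for each factor."""
    return {
        name: ramp_up(observed[name], lo, hi)
        for name, (lo, hi) in RISK_FACTORS.items()
    }

def contract_action(observed, alert_at=0.5, adjust_at=0.8):
    """Combine factors with OR (max) and map the result to an action."""
    overall = max(risk_degrees(observed).values())
    if overall >= adjust_at:
        return "adjust_obligations"
    if overall >= alert_at:
        return "alert"
    return "no_action"

calm = {"volatility": 0.03, "latency_s": 20.0, "counterparty_default_rate": 0.01}
tense = dict(calm, volatility=0.07)
stressed = dict(calm, volatility=0.10)
for obs in (calm, tense, stressed):
    print(risk_degrees(obs), "->", contract_action(obs))
```

Using max means any single severe factor is enough to escalate; a weighted average would be the alternative if factors should offset one another.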

This framework invites further experimentation by adjusting membership parameters and observing behavioral shifts in contract execution across diverse network conditions. Researchers are encouraged to explore hybrid models combining probabilistic data with graded membership evaluations to capture complex uncertainties inherent in real-world deployments.

Integrating Fuzzy Systems With Consensus

Implementing membership functions within consensus algorithms allows for nuanced decision-making processes that accommodate uncertainty inherent in decentralized networks. By defining linguistic variables such as trustworthiness, latency, or node reliability with degrees of belonging rather than binary states, consensus mechanisms can adjust their thresholds dynamically. This approach avoids the brittle pass/fail behavior of traditional protocols by providing a continuous scale on which validation confidence can be evaluated and aggregated.

The core function of these adaptable frameworks lies in translating imprecise input data from network participants into evaluative scores that influence block acceptance. For example, instead of categorizing validators simply as honest or dishonest, a membership curve quantifies varying levels of honesty based on historical behavior metrics. This gradation supports more resilient fault tolerance models and reduces vulnerability to Sybil attacks by weighting votes according to membership values rather than equal shares.
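
A toy version of membership-weighted voting is sketched below. It is not a Byzantine fault tolerant protocol; it only shows how vote weights derived from a hypothetical `valid_ratio` history metric change the tally compared with one vote per node. The breakpoints and the two-thirds threshold are assumptions.

```python
def honesty_degree(valid_ratio, lo=0.5, hi=0.95):
    """Map a validator's historical valid-block ratio to a degree of honesty."""
    return max(0.0, min(1.0, (valid_ratio - lo) / (hi - lo)))

def weighted_accept(votes, threshold=2 / 3):
    """votes: list of (approves, valid_ratio). Accept if weighted approval passes."""
    weights = [honesty_degree(ratio) for _, ratio in votes]
    total = sum(weights)
    if total == 0.0:
        return False  # nobody with any credibility voted
    approving = sum(w for (approves, _), w in zip(votes, weights) if approves)
    return approving / total >= threshold

# Three validators with a long record approve; five fresh identities reject.
votes = [(True, 0.99)] * 3 + [(False, 0.5)] * 5

equal_share = sum(1 for approves, _ in votes if approves) / len(votes)
print("equal-share approval:", equal_share)        # a minority by head count
print("weighted decision   :", weighted_accept(votes))
```

Identities without a track record contribute almost no weight, so creating many of them does not by itself swing the outcome.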

Experimental implementations reveal that embedding such graded assessments within Practical Byzantine Fault Tolerance (PBFT) variants improves throughput under fluctuating network conditions. When a consensus node treats latency or message loss rates as fuzzy variables, it can apply inference rules that approximate real-world networking conditions more effectively than crisp logic systems. These enhanced protocols demonstrate smoother convergence and fewer rollback events during transient partitions.

To construct these inference mechanisms, developers define sets of linguistic terms tied to system parameters–for instance, “high,” “medium,” and “low” network load–with corresponding membership functions shaped by empirical measurements. Aggregation operators then combine multiple inputs through fuzzy conjunctions or disjunctions to yield final trust scores used in leader election or quorum verification stages. Such designs are particularly valuable for permissioned ledgers where participant reputations evolve continuously.
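
The choice of aggregation operator matters, as the small sketch below suggests. It compares two standard fuzzy conjunctions, the minimum and the algebraic product, on invented membership degrees for three hypothetical nodes and elects the node with the highest combined score.

```python
# Pre-computed membership degrees per candidate (illustrative values).
candidates = {
    "node-a": {"uptime_high": 1.0, "load_low": 0.5},
    "node-b": {"uptime_high": 0.6, "load_low": 0.6},
    "node-c": {"uptime_high": 0.9, "load_low": 0.3},
}

def t_min(a, b):
    """Minimum t-norm: the score is limited by the weakest criterion."""
    return min(a, b)

def t_product(a, b):
    """Product t-norm: every criterion scales the score."""
    return a * b

def elect(nodes, t_norm):
    """Score each node as uptime_high AND load_low, then pick the best."""
    scores = {
        name: t_norm(d["uptime_high"], d["load_low"])
        for name, d in nodes.items()
    }
    return max(scores, key=scores.get), scores

print("min     :", elect(candidates, t_min))
print("product :", elect(candidates, t_product))
```

With these numbers the two operators elect different leaders: the minimum favours the balanced node, while the product rewards the node that is excellent on one criterion. That is why the operator should be chosen deliberately rather than by default.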

One direction for future research is combining adaptive fuzzification layers with machine learning models trained on blockchain telemetry, which could further refine consensus accuracy. By continuously tuning membership functions based on observed transaction validation success rates and network topology changes, autonomous adjustment becomes feasible. This hybrid methodology promises not only increased robustness but also the capacity to self-optimize governance parameters without manual intervention.

Optimizing Data Validation Using Fuzziness: Final Insights

Implementing variable membership functions tailored to linguistic parameters enhances data validation frameworks by enabling nuanced classification beyond binary thresholds. Such gradation supports decision-making processes where input uncertainty prevails, allowing for smoother integration of diverse data sources with inconsistent quality.

Incorporating flexible inference mechanisms that interpret overlapping sets facilitates better handling of ambiguous or partially conflicting information. For example, assigning degrees of conformity to transaction legitimacy within blockchain environments reduces false positives in fraud detection without sacrificing sensitivity to anomalous patterns.

Key Technical Considerations and Future Directions

- Dynamic Membership Adaptation: Refining the shape and scale of membership curves in response to real-time data variability can improve validation accuracy while preserving computational efficiency.

- Linguistic Variable Expansion: Enriching the vocabulary used in input variables with context-aware terms provides a richer semantic framework, fostering more intuitive rule construction and interpretability.

- Multi-Function Integration: Employing composite functions that combine triangular, trapezoidal, and Gaussian profiles allows optimized representation of diverse uncertainty types inherent in decentralized ledger inputs.

- Hybrid Analytical Models: Merging this approach with probabilistic models or machine learning classifiers could yield hybrid engines capable of deeper insight extraction from noisy datasets.
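
As one possible reading of dynamic membership adaptation, the sketch below lets a Gaussian membership function track incoming data with an exponentially weighted mean and variance. The class name `AdaptiveGaussian`, the learning `rate`, and the `min_sigma` floor are assumptions introduced for the example.

```python
import math

class AdaptiveGaussian:
    """Gaussian membership whose centre and width follow recent observations."""

    def __init__(self, mean, sigma, rate=0.1, min_sigma=1e-3):
        self.mean = mean
        self.sigma = sigma
        self.rate = rate            # how quickly new data overrides old data
        self.min_sigma = min_sigma  # floor that keeps the curve from collapsing

    def degree(self, x):
        return math.exp(-0.5 * ((x - self.mean) / self.sigma) ** 2)

    def update(self, x):
        """Exponentially weighted update of mean and variance."""
        delta = x - self.mean
        self.mean += self.rate * delta
        variance = (1.0 - self.rate) * (self.sigma ** 2 + self.rate * delta ** 2)
        self.sigma = max(self.min_sigma, math.sqrt(variance))

# "Typical" transaction latency starts centred on 10 and drifts towards 14.
typical = AdaptiveGaussian(mean=10.0, sigma=2.0)
before = typical.degree(14.0)
for _ in range(50):
    typical.update(14.0)
after = typical.degree(14.0)

print(f"degree of 14.0 before: {before:.3f}, after: {after:.3f}")
print(f"adapted mean: {typical.mean:.2f}, sigma: {typical.sigma:.2f}")
```

Each update costs a constant amount of work, which fits the goal of improving accuracy without giving up computational efficiency. With perfectly constant input the width keeps shrinking, hence the floor.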

This methodology advances validation protocols by embracing gradual transitions between states rather than rigid classifications, thereby aligning computational models closer to human-like evaluative processes. The experimental calibration of membership parameters invites further exploration into adaptive algorithms that self-tune according to evolving network conditions.

The path forward involves systematic trials integrating these principles within smart contract verification modules and distributed consensus algorithms. Investigations into optimizing membership function parameters for specific blockchain use cases promise not only improved integrity checks but also enhanced scalability through reduced overheads in complex transaction networks.