Filecoin enforces data redundancy through Proof of Replication (PoRep), which requires each storage provider to demonstrate that it holds its own uniquely encoded copy of the data. Integrity can then be checked without downloading the data or asking clients to upload it again. Because each replica must be proven independently, the protocol guards against providers that claim several copies while storing only one, which safeguards against data loss and improves network reliability.

The core mechanism pairs a one-time sealing proof, which shows that a unique replica was created, with recurring challenges that require nodes to keep proving they retain the encoded files; in Filecoin, these recurring checks are Proofs of Spacetime. Together they are designed to ensure that claimed duplicates are genuinely stored rather than fabricated or simulated, reinforcing trust in decentralized archives. This model shifts verification from reactive retrieval attempts to proactive commitments backed by verifiable evidence.

Implementing this technique improves resilience by distributing redundant datasets across numerous participants while keeping verification bandwidth low, because verifiers check short proofs instead of downloading data. Most of the cost falls on providers, who perform an expensive sealing computation once per replica; verification stays cheap, which enables large-scale deployment without compromising proof accuracy.

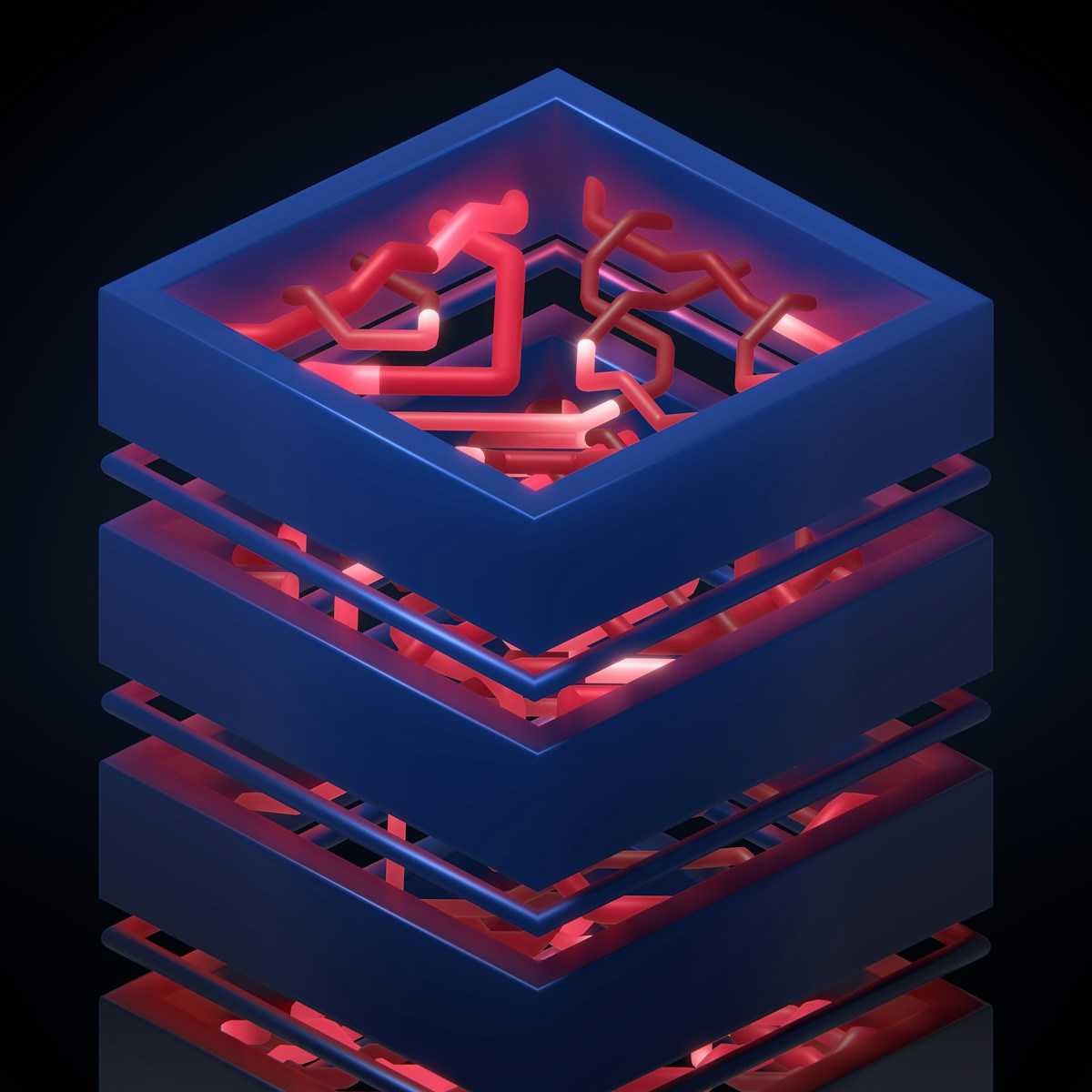

Proof of Replication: Storage Verification Systems

To ensure data integrity within decentralized networks, it is necessary to confirm that a unique copy of the information is physically stored by a participant. This validation process hinges on cryptographic challenges designed to verify that the holder maintains exclusive custody of the dataset, not merely a pointer or partial segment. Such mechanisms are critical for preventing fraudulent claims and guaranteeing redundancy at the protocol level.

One prominent implementation is Filecoin’s, which requires storage providers (often called miners) to encode and commit data uniquely before responding to periodic checks. By keeping distinct replicas on their own hardware, providers demonstrate continuous possession through succinct proofs that do not reveal the underlying content. Because these proofs are verifiable and non-interactive, storage claims can be checked by anyone, which fosters trust in distributed archives.

Technical Foundations and Methodologies

The core principle is to produce evidence that a node stores its own encoded version of specific data segments. The process begins by transforming the original files with a deliberately slow, sequential encoding keyed by a replica identifier; Filecoin’s sealing uses Stacked DRG (SDR) encoding for this step. Because every replica is encoded under a different key, the resulting copies differ structurally while remaining decodable to the original data, and holding several such independently provable copies supports fault tolerance through redundancy.
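A toy version of a replica-keyed, reversible encoding can be sketched in Python. It assumes 32-byte nodes, SHA-256 labels, and a simplified layered graph in which each node depends only on its left neighbour and on the node below it. Filecoin’s SDR sealing uses a richer graph and different arithmetic, so this illustrates the idea rather than the protocol. The names `derive_key`, `encode`, and `decode` are hypothetical.

```python
import hashlib

NODE = 32  # bytes per node (illustrative assumption)
ZERO = bytes(NODE)


def label(replica_id: bytes, layer: int, index: int, left: bytes, below: bytes) -> bytes:
    """Hash one node from its left neighbour (same layer) and the node below it."""
    return hashlib.sha256(
        replica_id + layer.to_bytes(4, "big") + index.to_bytes(8, "big") + left + below
    ).digest()


def derive_key(replica_id: bytes, num_nodes: int, layers: int) -> list[bytes]:
    """Toy layered labelling: each node waits for its left neighbour,
    so the work inside one layer is strictly sequential."""
    below = [ZERO] * num_nodes
    for layer in range(layers):
        current: list[bytes] = []
        for i in range(num_nodes):
            left = current[i - 1] if i > 0 else ZERO
            current.append(label(replica_id, layer, i, left, below[i]))
        below = current
    return below


def encode(data: bytes, replica_id: bytes, layers: int = 3) -> bytes:
    """Mask each 32-byte node of the data with the replica-specific key."""
    if len(data) % NODE:
        raise ValueError("data length must be a multiple of NODE")
    key = derive_key(replica_id, len(data) // NODE, layers)
    out = bytearray()
    for i, k in enumerate(key):
        chunk = data[i * NODE:(i + 1) * NODE]
        out += bytes(a ^ b for a, b in zip(chunk, k))
    return bytes(out)


def decode(replica: bytes, replica_id: bytes, layers: int = 3) -> bytes:
    """XOR masking is its own inverse, so decoding recomputes the same key."""
    return encode(replica, replica_id, layers)


data = bytes(range(64)) * 2  # 128 bytes = 4 nodes
r1 = encode(data, b"provider-A/sector-1")
r2 = encode(data, b"provider-B/sector-7")
assert r1 != r2                                    # same data, different replicas
assert decode(r1, b"provider-A/sector-1") == data  # still recoverable
```

Because the key depends only on the replica identifier, two providers sealing identical data end up with unrelated bytes, yet each can recover the original by recomputing its own key.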

Verification involves issuing random queries derived from cryptographic seeds, which challenge nodes to return responses tied to their unique replica. If a participant cannot consistently produce correct answers, it has either lost data or is not storing a separate copy, and either outcome undermines system reliability. Experimental setups often measure response latency and accuracy over multiple rounds to quantify resilience against cheating or data degradation.

- Encoding: Data undergoes a slow, replica-specific transformation that is costly to compute but reversible, producing encoded replicas.

- Commitment: Nodes register cryptographic commitments binding them to stored content.

- Challenge-Response: Verifiers send unpredictable challenges requiring proof generation.

- Validation: Responses are checked against expected values ensuring exclusivity.
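The four steps above can be sketched end to end. This is a minimal illustration under stated assumptions: XOR masking stands in for real sealing, SHA-256 with simple leaf and node prefixes builds the Merkle tree, challenge indices are reduced modulo the leaf count (which introduces a slight bias), and there is no SNARK compression. All function names are hypothetical.

```python
import hashlib

NODE = 32  # bytes per leaf (illustrative assumption)


def h(*parts: bytes) -> bytes:
    return hashlib.sha256(b"".join(parts)).digest()


# 1. Encoding (toy): mask each leaf with a key derived from the replica id.
def encode(data: bytes, replica_id: bytes) -> list[bytes]:
    leaves = [data[i:i + NODE] for i in range(0, len(data), NODE)]
    return [bytes(a ^ b for a, b in zip(leaf, h(replica_id, i.to_bytes(8, "big"))))
            for i, leaf in enumerate(leaves)]


# 2. Commitment: a Merkle tree over the encoded leaves; only the root is published.
def build_tree(leaves: list[bytes]) -> list[list[bytes]]:
    if not leaves or len(leaves) & (len(leaves) - 1):
        raise ValueError("this sketch needs a power-of-two number of leaves")
    level = [h(b"\x00", leaf) for leaf in leaves]   # 0x00 prefix marks leaf hashes
    levels = [level]
    while len(level) > 1:
        level = [h(b"\x01", level[i], level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


# 3. Challenge: indices derived from public randomness, reproducible by anyone.
def challenges(seed: bytes, num_leaves: int, k: int) -> list[int]:
    return [int.from_bytes(h(seed, j.to_bytes(4, "big")), "big") % num_leaves
            for j in range(k)]


# Response: the challenged leaf plus the sibling hash at every tree level.
def prove(levels: list[list[bytes]], leaves: list[bytes], index: int):
    path, i = [], index
    for level in levels[:-1]:
        path.append(level[i ^ 1])
        i //= 2
    return leaves[index], path


# 4. Validation: rebuild the root from the response and compare.
def verify(root: bytes, index: int, leaf: bytes, path: list[bytes]) -> bool:
    node, i = h(b"\x00", leaf), index
    for sibling in path:
        node = h(b"\x01", node, sibling) if i % 2 == 0 else h(b"\x01", sibling, node)
        i //= 2
    return node == root


data = bytes(range(256))                  # 8 leaves of 32 bytes
replica = encode(data, b"replica-1")
levels = build_tree(replica)
root = levels[-1][0]
for idx in challenges(b"epoch-randomness", len(replica), k=3):
    leaf, path = prove(levels, replica, idx)
    assert verify(root, idx, leaf, path)
```

The verifier only needs the 32-byte root and the seed; everything else arrives in the response, and a single flipped bit in a challenged leaf makes the recomputed root differ.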

This layered approach draws on the redundancy principles of classical error-correcting codes used in telecommunications, adapted to decentralized ledger environments where economic incentives drive honest participation. Reported results indicate that it detects partial storage failures more reliably than simpler availability proofs.

The redundancy level and the challenge frequency must be calibrated together: excessive redundancy inflates resource consumption, while insufficient challenge frequency risks undetected corruption. Field trials on Filecoin’s network are reported to show that careful parameter tuning can sustain high integrity across large-scale deployments while keeping operations efficient.
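One way to reason about challenge frequency is to ask how many random challenges are needed to catch a provider that silently dropped a fraction of its data. Assuming each challenge picks a leaf uniformly and independently, the detection probability for a missing fraction f and k challenges is 1 - (1 - f)^k. The helper names below are hypothetical.

```python
import math


def detection_probability(missing_fraction: float, k: int) -> float:
    """Chance that at least one of k independent, uniformly random
    challenges lands on a missing or corrupted leaf."""
    return 1.0 - (1.0 - missing_fraction) ** k


def challenges_needed(missing_fraction: float, confidence: float) -> int:
    """Smallest k whose detection probability reaches the target confidence."""
    if not (0.0 < missing_fraction < 1.0 and 0.0 < confidence < 1.0):
        raise ValueError("fractions must lie strictly between 0 and 1")
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - missing_fraction))


for f in (0.5, 0.1, 0.01):
    k = challenges_needed(f, 0.99)
    print(f"{f:.0%} missing -> {k} challenges, P(detect) = {detection_probability(f, k):.4f}")
```

At a 99% target, a provider missing half its data is caught with 7 challenges, while one missing 1% needs 459, which is why guarding against small-scale corruption drives challenge counts up.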

The exploration of these data assurance protocols offers practical avenues for further research into enhancing persistence guarantees in distributed archives beyond blockchain frameworks. Investigators may replicate experiments using open-source toolkits simulating varied adversarial conditions, extending understanding of how replication-based attestations contribute toward robust digital preservation infrastructures.

Implementing PoRep in Storage Networks

Accurate confirmation of data duplication within decentralized archives demands rigorous cryptographic challenges that ensure physical allocation rather than mere logical claims. To achieve this, a robust mechanism enforces data integrity by compelling providers to encode and seal unique copies of files, preventing shortcuts or fraudulent proofs. This approach strengthens redundancy by verifying that multiple independent replicas exist across the network.

Filecoin’s infrastructure exemplifies this methodology by integrating mechanisms that cryptographically bind pieces of data to specific storage sectors. By encoding original information into sealed segments, participants must demonstrate continuous custody through randomized checks, thus maintaining trust without exhaustive audits. This design balances computational overhead with security guarantees, fostering reliable preservation of user content.

Technical Foundations and Experimental Approaches

The core procedure encodes raw input into replica-specific data blocks, commits to those blocks with a Merkle tree, and answers challenges with proofs that open selected leaves of the tree. This establishes a verifiable link between stored files and their physical presence on hardware devices. Experimental setups can vary parameters such as sector size, challenge frequency, and encoding complexity to observe their effect on performance and security.
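The effect of sector size and challenge count on proof size can be estimated before running any experiment. This sketch assumes a binary Merkle tree over 32-byte leaves and counts the raw bytes of uncompressed inclusion proofs; higher-arity trees or proof compression would change the numbers. The helpers are hypothetical.

```python
NODE_BYTES = 32  # assumed size of a leaf and of each hash


def merkle_depth(sector_bytes: int) -> int:
    """Levels in a binary Merkle tree over 32-byte leaves (rounded up)."""
    leaves = sector_bytes // NODE_BYTES
    if leaves < 1:
        raise ValueError("sector must hold at least one leaf")
    return (leaves - 1).bit_length()


def raw_proof_bytes(sector_bytes: int, challenges: int) -> int:
    """Each challenge returns its leaf plus one sibling hash per level."""
    return challenges * NODE_BYTES * (1 + merkle_depth(sector_bytes))


MiB, GiB = 2**20, 2**30
for size in (512 * MiB, 32 * GiB, 64 * GiB):
    for k in (10, 100):
        print(f"sector {size // MiB:>6} MiB, {k:>3} challenges: "
              f"depth {merkle_depth(size)}, {raw_proof_bytes(size, k):,} bytes")
```

Doubling the sector adds only one tree level (32 bytes per challenge), whereas doubling the number of challenges doubles the raw proof, which is one motivation for compressing proofs.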

Investigations reveal that increasing redundancy via systematic duplication improves fault tolerance but also raises resource consumption. Careful calibration is essential: excessive redundancy may burden bandwidth and storage quotas, while insufficient replication risks data loss. By conducting controlled trials manipulating these variables, researchers gain insight into optimal configurations tailored for diverse network scales.
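The redundancy trade-off can be quantified under a simple model. Assuming each replica is lost independently with probability `p_fail` over some period, all `r` replicas are lost with probability `p_fail ** r`, while storage grows linearly with `r`. Correlated failures (for example, every replica in one data center) break this assumption. The function names are hypothetical.

```python
def loss_probability(p_fail: float, replicas: int) -> float:
    """Probability that all replicas fail, assuming failures are independent."""
    return p_fail ** replicas


def min_replicas(p_fail: float, target_loss: float) -> int:
    """Smallest replica count whose loss probability is at most target_loss."""
    if not 0.0 <= p_fail < 1.0 or target_loss <= 0.0:
        raise ValueError("need 0 <= p_fail < 1 and target_loss > 0")
    r = 1
    while loss_probability(p_fail, r) > target_loss:
        r += 1
    return r


dataset_tib = 1.0
for r in range(1, 6):
    print(f"{r} replicas: P(loss) = {loss_probability(0.05, r):.2e}, "
          f"storage = {r * dataset_tib:.0f} TiB")
print("replicas for P(loss) <= 1e-6 at 5% failure:", min_replicas(0.05, 1e-6))
```

With a 5% per-replica failure probability, five replicas are needed to push the loss probability below one in a million, at five times the raw storage.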

- Challenge-response cycles: Frequent random challenges assure ongoing commitment from storage nodes.

- Encoding complexity: Balancing computational load against resistance to forgery attempts.

- Redundancy levels: Adjusted to align with acceptable risk thresholds for data availability.

In Filecoin case studies, combining the sealing phase with proof generation reportedly detected dishonest behavior more reliably than traditional archival methods. These findings encourage further laboratory-style experiments in which developers simulate attacks or failures under controlled conditions to validate resilience before deployment.

This framework invites practitioners to test iteratively, modifying one factor at a time while monitoring integrity metrics, to refine protocols for secure distributed archives. Documenting outcomes systematically helps relate theoretical models to the practical results observed in network simulators or testnets.

A deeper understanding emerges when these cryptographic assurances are connected with familiar principles, such as error-correcting codes from information theory and consensus validation from distributed systems, which shows how abstract proofs translate into tangible guarantees about the persistence of stored content. Such explorations underpin continuous advancements in decentralized digital repositories.

Verifying Data Integrity with PoRep

Ensuring that stored data remains unaltered and accessible requires robust mechanisms that confirm the authenticity of duplicated information. The method employed by Filecoin involves encoding original data into unique replicas, making each copy distinct even if the source content is identical. This approach enhances redundancy, as nodes must demonstrate possession of these specialized duplicates rather than generic copies, thus preventing shortcuts in resource commitment.

Filecoin’s architecture requires storage providers to keep demonstrating genuine duplication by answering cryptographic challenges. Because each proof is tied to specific encoded data segments, the network enforces strict accountability: a valid proof is verifiable evidence that a node still holds its allocated dataset intact, which strengthens trust within decentralized platforms.

Technical Implementation and Experimental Observations

The transformation of input files into sealed replicas combines the original data with a replica-specific key derived from a replica identifier, producing a mapping that is designed to be impractical to forge without redoing the costly encoding. By analogy with molecular isomers in chemistry, which share an elemental composition but differ in structural arrangement, each replica holds the same information in a unique form. Verification then samples random positions in the replica and checks each one, together with its Merkle authentication path, against the published commitment.

Case studies of Filecoin nodes indicate that maintaining consistent proof generation under fluctuating network conditions demands optimized computational pipelines and effective bandwidth management. In laboratory simulations, introducing controlled delays or partial data corruption reportedly caused attestations to fail, because a valid proof cannot be produced once a deadline is missed or a challenge lands on corrupted data; this highlights the sensitivity of these mechanisms. Consequently, such systems not only guard integrity but also hold nodes to high performance standards through continuous validation cycles.

PoRep Challenges in Decentralized Systems

Ensuring the integrity of data across decentralized networks demands robust methodologies that confirm genuine duplication without centralized oversight. Filecoin’s approach encodes each stored copy uniquely, which prevents trivial storage claims, but these techniques introduce significant computational overhead and make it harder to keep numerous nodes synchronized.

One major hurdle lies in balancing the trade-offs between verification speed and cryptographic security. While rapid confirmation of stored content is desirable for scalability, aggressive optimization can reduce the confidence level in authenticity guarantees. The challenge escalates as network participants increase, requiring scalable protocols capable of handling diverse hardware capabilities without compromising accuracy or fairness.

Technical Complexities Affecting Data Duplication Assurance

The process of transforming raw files into encoded formats suitable for decentralized proof frameworks often incurs substantial resource consumption. Nodes must perform intensive hashing and sealing operations that demand both time and energy, potentially limiting participation by less powerful actors. This creates a disparity within networks like Filecoin, where nodes with specialized hardware gain an advantage, which affects overall decentralization.

Redundancy strategies designed to protect against data loss introduce additional layers of difficulty. Maintaining multiple copies distributed globally requires complex bookkeeping to track which fragments reside on which peers. Inaccuracies here can lead to false positives during challenges or unwarranted penalties, weakening the trust incentives embedded in the protocol’s economic model.

- Resource-intensive encoding: heavy CPU/GPU use during initial file transformation phases.

- Fragment tracking complexities: synchronizing metadata about distributed chunks efficiently.

- Latency impacts: delays caused by long-range communication between geographically dispersed nodes.

The verification component itself depends heavily on probabilistic sampling methods that check subsets of stored data rather than exhaustively scanning entire datasets. This method preserves bandwidth but opens avenues for strategic cheating if adversaries predict challenge patterns or manipulate local storage states temporarily. Developing unpredictable yet reproducible challenge algorithms remains an ongoing experimental pursuit.
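A small simulation shows why challenge patterns must stay unpredictable. It assumes a provider that discards data: in the predictable case it knows a fixed challenge set in advance and keeps only those leaves, while in the unpredictable case it keeps a random half and faces fresh challenges every round. `catch_rate` is a hypothetical helper, and Python’s `random` module stands in for the public randomness a real protocol would use.

```python
import random


def catch_rate(num_leaves: int, kept_fraction: float, k: int, rounds: int,
               predictable: bool, seed: int = 7) -> float:
    """Fraction of rounds in which a provider that discarded data is caught."""
    rng = random.Random(seed)
    fixed = [rng.randrange(num_leaves) for _ in range(k)]  # challenge set that never changes
    if predictable:
        # The adversary knows the challenges in advance and keeps only those
        # leaves (kept_fraction is ignored in this mode).
        stored = set(fixed)
    else:
        stored = set(rng.sample(range(num_leaves), int(kept_fraction * num_leaves)))
    caught = 0
    for _ in range(rounds):
        challenge = fixed if predictable else [rng.randrange(num_leaves) for _ in range(k)]
        if any(i not in stored for i in challenge):
            caught += 1
    return caught / rounds


print("predictable:  ", catch_rate(1000, 0.5, k=4, rounds=5000, predictable=True))
print("unpredictable:", catch_rate(1000, 0.5, k=4, rounds=5000, predictable=False))
print("analytic:     ", 1 - 0.5 ** 4)
```

The predictable run never catches the cheater even though it stores almost nothing, while the unpredictable run tracks the analytic value 1 - 0.5^4 = 0.9375.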

A particularly instructive case study involves examining Filecoin’s recent upgrades aimed at reducing sealing durations via parallelization while preserving cryptographic soundness. Early testnets demonstrated noticeable improvements; however, they also uncovered new vulnerabilities related to race conditions during concurrent proof generation processes, emphasizing the delicate balance between performance enhancement and protocol resilience.

This exploration encourages further laboratory-style experimentation with alternative encoding algorithms and adaptive challenge schedules designed to enhance unpredictability while minimizing system strain. Researchers are invited to simulate various network topologies and adversarial models to iteratively refine these components under controlled conditions before real-world deployment.

A systematic approach combining empirical trials with theoretical modeling offers the most promising path toward resolving these multifaceted obstacles inherent in decentralized duplication confirmation mechanisms. Through patient inquiry and collaborative innovation, reliable assurance of distributed content permanence becomes increasingly attainable within complex peer-to-peer ecosystems.

Optimizing Proof Generation Speed

Accelerating the generation of data validation outputs requires reducing computational overhead and increasing concurrency in encoding. Parallel processing techniques, such as multi-threading and GPU acceleration, can significantly shorten the time needed to transform raw input into verifiable evidence. For instance, Filecoin packs data into fixed-size sectors: the encoding inside each sector is deliberately sequential, but separate sectors can be sealed at the same time, which shortens overall proof creation without compromising cryptographic soundness.
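Sector-level parallelism can be sketched with Python’s `concurrent.futures`. `seal_sector` is a hypothetical stand-in: a hash chain whose steps must run in order, mimicking the sequential work inside one sector, while separate sectors are independent and can be processed concurrently. In CPython, threads give little real speed-up for hashing many small inputs because of the global interpreter lock, so `ProcessPoolExecutor`, which offers the same `map` interface, would be the choice for CPU-bound work; production sealers rely on native code and GPUs.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor


def seal_sector(sector: bytes, replica_id: bytes, steps: int = 2000) -> bytes:
    """Stand-in for sealing one sector: a hash chain seeded by the replica id
    and the sector's data, whose steps must run strictly in order."""
    state = hashlib.sha256(replica_id + sector).digest()
    for _ in range(steps):
        state = hashlib.sha256(state).digest()  # each step needs the previous one
    return state


def seal_all(sectors: list[bytes], workers: int = 4) -> list[bytes]:
    """Seal independent sectors concurrently; results keep the input order."""
    ids = [f"sector-{i}".encode() for i in range(len(sectors))]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(seal_sector, sectors, ids))


sectors = [bytes([i]) * 64 for i in range(6)]
sealed = seal_all(sectors)
print(len(sealed), "sectors sealed")
```

The design keeps the per-sector work untouched and parallelizes only across sectors, which matches the point above: the sequential encoding is what makes a replica expensive to fake, so speed-ups come from running many sectors side by side.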

Careful redundancy management also affects how quickly integrity attestations can be produced. Balancing replication factors against erasure-coding schemes reduces the volume of data that must be reprocessed during challenges and repairs while maintaining robust fault tolerance, an approach seen in distributed ledgers that minimize data movement alongside secure confirmation cycles.
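Replication and erasure coding can be compared on overhead, fault tolerance, and repair cost. The sketch assumes a standard Reed-Solomon code with k data and m parity shards, where any k shards rebuild the data and repairing one shard reads k others. The `Redundancy` type and helpers are hypothetical, and the comparison concerns redundancy schemes in general rather than anything Filecoin’s PoRep itself performs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Redundancy:
    name: str
    overhead: float        # stored bytes per byte of user data
    tolerated_losses: int  # copies or shards that may be lost without data loss
    repair_reads: int      # copies or shards read to rebuild one lost piece


def replication(r: int) -> Redundancy:
    return Redundancy(f"{r}x replication", float(r), r - 1, 1)


def reed_solomon(k: int, m: int) -> Redundancy:
    """k data shards plus m parity shards: any k of the k + m shards rebuild the data."""
    return Redundancy(f"RS({k},{m})", (k + m) / k, m, k)


for scheme in (replication(3), reed_solomon(10, 4), reed_solomon(6, 3)):
    print(f"{scheme.name:>15}: overhead {scheme.overhead:.2f}x, "
          f"tolerates {scheme.tolerated_losses}, repair reads {scheme.repair_reads}")
```

RS(10,4) tolerates more losses than triple replication at less than half the storage, but each repair reads ten shards instead of one copy, which is the reprocessing cost that has to be balanced.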

Technical Strategies for Enhanced Efficiency

One effective method is to retain intermediate states computed during encoding, such as Merkle tree layers, so they need not be recalculated for each verification request. Caching these partial results reduces the latency of generating new proofs and supports real-time responses under high network load. Experimental deployments within decentralized archival platforms have reportedly cut generation times by over 30% through systematic reuse of hashed segments, streamlining operational throughput.
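The benefit of keeping intermediate results can be shown by counting hash calls rather than timing. The sketch assumes the cached state is the full set of Merkle tree levels over a 1,024-leaf replica, and compares rebuilding the tree for every challenge with building it once and reading sibling paths from the stored levels. `CountingHash` and the other names are hypothetical; the counts illustrate the mechanism and are not a measurement of wall-clock savings.

```python
import hashlib


class CountingHash:
    """SHA-256 wrapper that counts invocations, to compare strategies."""

    def __init__(self) -> None:
        self.calls = 0

    def __call__(self, *parts: bytes) -> bytes:
        self.calls += 1
        return hashlib.sha256(b"".join(parts)).digest()


def build_levels(leaves: list[bytes], h: CountingHash) -> list[list[bytes]]:
    """All Merkle tree levels, leaves first (power-of-two leaf count assumed)."""
    level = [h(b"\x00", leaf) for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        level = [h(b"\x01", level[i], level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def sibling_path(levels: list[list[bytes]], index: int) -> list[bytes]:
    path = []
    for level in levels[:-1]:
        path.append(level[index ^ 1])
        index //= 2
    return path


leaves = [i.to_bytes(32, "big") for i in range(1024)]
challenged = [3, 500, 1023, 77, 640]

# Without caching: rebuild the whole tree for every challenge.
uncached = CountingHash()
paths_a = [sibling_path(build_levels(leaves, uncached), i) for i in challenged]

# With caching: build once after encoding, then only read stored levels.
cached = CountingHash()
levels = build_levels(leaves, cached)
paths_b = [sibling_path(levels, i) for i in challenged]

assert paths_a == paths_b
print("hashes without cache:", uncached.calls)  # 5 * 2047 = 10235
print("hashes with cache:   ", cached.calls)    # 2047
```

The trade-off is storage: the cached levels occupy roughly as much space again as the hashed leaves, which is why a prover might keep only some levels and recompute the rest.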

Succinct non-interactive arguments of knowledge (SNARKs) further shorten validation timelines. Rather than compressing the dataset itself, a SNARK compresses many individual checks, such as Merkle inclusion proofs, into one compact proof that is cheap to verify; a Groth16 proof over BLS12-381, for example, is 192 bytes regardless of how many challenges it covers. Filecoin’s adoption of SNARK-based proofs exemplifies this principle: verifiers confirm replicated file possession with substantially fewer computational cycles than checking each proof individually, although generating the SNARK remains expensive for the provider.

Data layout optimization complements algorithmic improvements by improving cache locality and reducing I/O bottlenecks. Structuring files to match hardware-specific memory hierarchies speeds up access during challenge-response interactions. One reported case study found that tuning block sizes and access patterns for solid-state drives increased proof generation speed by 25% across multiple network nodes without altering the underlying protocols.

Integrating PoRep with Digital Discovery Tools: Analytical Conclusion

Applying redundancy mechanisms alongside data encoding enhances the integrity of decentralized archives, particularly within Filecoin’s ecosystem. Combining these methods with advanced digital discovery frameworks enables continuous confirmation that unique datasets remain consistently accessible and unaltered across distributed nodes.

The synergy between data duplication validation and exploration utilities not only increases fault tolerance but also optimizes resource allocation by dynamically identifying underutilized storage segments. This integration elevates trustworthiness in archival infrastructures through automated challenges that verify content authenticity without excessive overhead.

Key Technical Insights and Future Perspectives

- Redundancy amplification: Layering multiple replication proofs increases resilience against node failures and can substantially reduce the risk of data loss in large-scale deployments.

- Dynamic data indexing: Employing discovery tools facilitates real-time mapping of stored content, thereby accelerating retrieval processes and minimizing latency during audits.

- Adaptive challenge protocols: Innovations in randomized sampling enable efficient verification cycles tailored to system load, preserving bandwidth while maintaining rigorous integrity checks.

- Interoperability considerations: Aligning replication attestations with external metadata registries broadens applicability beyond Filecoin, fostering cross-platform archival ecosystems.

The experimental fusion of these approaches forms a robust architecture where data immutability is actively maintained through iterative confirmations, complemented by intelligent exploration capabilities. Encouraging further research into scalable verification algorithms and predictive redundancy models will refine operational efficiency and expand adoption across diverse blockchain-based repositories.

This evolving paradigm invites practitioners to treat system validation as an empirical process, designing incremental tests that measure how different replication densities affect network throughput and durability. Such hands-on inquiry can reveal configurations that balance cost against long-term data preservation in decentralized environments.