Maximizing throughput requires structuring multiple processing units to operate simultaneously while minimizing idle periods. Effective task distribution ensures balanced load across all active threads, preventing bottlenecks that degrade overall performance. Implementing fine-grained synchronization mechanisms allows processes to coordinate without excessive waiting, preserving progress and reducing contention.

The architecture of simultaneous execution demands careful management of shared resources to avoid race conditions and deadlocks. Partitioning workloads into independent segments enables overlapping operations, increasing system utilization. Scheduling strategies must account for interdependencies between tasks, orchestrating their progression through synchronization primitives that guarantee data consistency.

Optimization involves iterative refinement of interaction patterns among executing units. Profiling runtime behavior reveals hotspots where contention or imbalance occurs, guiding adjustments in workload granularity and communication frequency. Experimentation with lock-free structures and atomic operations can further decrease overhead associated with coordination, pushing efficiency boundaries in large-scale parallel environments.

Parallel algorithms: concurrent computation design

Distributing load across multiple threads can substantially raise the throughput of blockchain transaction validation. Dividing the work into smaller units that run simultaneously on different cores shortens processing time, provided the units do not depend on one another. Sharding designs in some blockchains apply the same idea at network scale, aiming to increase capacity while limiting the cost to security and decentralization.

Effective implementation requires careful synchronization to prevent race conditions and ensure consistent state updates. Utilizing thread-safe data structures and lock-free programming models helps maintain integrity while minimizing contention overhead. Experimenting with work-stealing schedulers demonstrates how dynamic load balancing improves resource utilization during peak network activity.
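
As a minimal sketch of the chunking idea, the following Python splits a list of transactions into contiguous chunks and validates them on a thread pool. The `is_valid` rule and the function names are hypothetical placeholders, not part of any real client.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor


def is_valid(tx: bytes) -> bool:
    # Hypothetical rule, for illustration only: a transaction counts as
    # "valid" when the first byte of its SHA-256 digest is even.
    return hashlib.sha256(tx).digest()[0] % 2 == 0


def validate_chunk(chunk):
    # Each chunk is independent, so no locking is needed here.
    return [is_valid(tx) for tx in chunk]


def validate_parallel(txs, workers=4):
    # Split the input into contiguous chunks, roughly one per worker.
    size = max(1, -(-len(txs) // workers))  # ceiling division
    chunks = [txs[i:i + size] for i in range(0, len(txs), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in the same order as the input chunks.
        results = list(pool.map(validate_chunk, chunks))
    return [flag for chunk in results for flag in chunk]


txs = [f"tx-{i}".encode() for i in range(1000)]
flags = validate_parallel(txs)
```

The sketch shows the structure rather than a speedup: in CPython, threads run in parallel only while the work releases the global interpreter lock or waits on I/O, so CPU-bound validation written in pure Python would more likely use `ProcessPoolExecutor` with the same chunking.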

Thread management and task scheduling in distributed ledgers

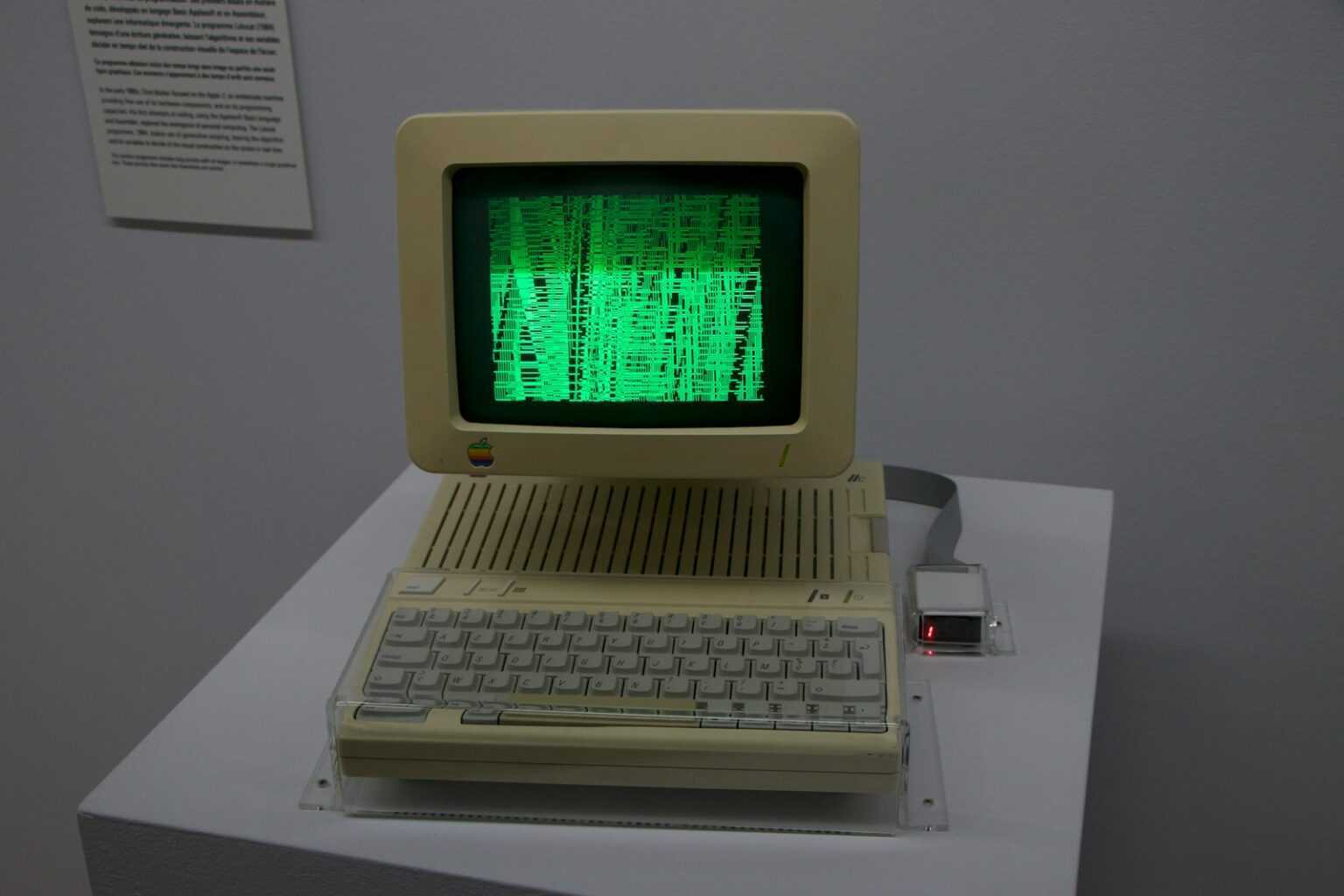

Concurrent execution frameworks rely on lightweight threads to parallelize cryptographic hashing and signature verification, the core operations within blockchain nodes. Splitting a proof-of-work nonce search, or a batch of proof-of-stake signature checks, into subtasks that run concurrently reduces latency. Case studies are reported to show block validation up to 40% faster with GPU-accelerated threading than with serial processing, although the gain depends heavily on hardware and workload.

The design of these systems must account for heterogeneous hardware environments where thread execution times vary unpredictably. Adaptive scheduling algorithms assign workloads dynamically based on real-time performance metrics, optimizing throughput under fluctuating network loads. Experiments on simulated blockchain networks suggest that such adaptive methods also improve resilience against denial-of-service attacks that target computational bottlenecks.

- Run multi-threaded mining that splits the nonce search space evenly across workers

- Integrate concurrent mempool sorting algorithms for faster transaction prioritization

- Apply asynchronous I/O operations alongside computation threads to minimize idle time
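
The nonce-search item in the list above can be sketched as follows. This is an illustrative toy, not a real mining loop: the header bytes, the hex-prefix difficulty rule, and the names `search_range` and `mine` are all assumptions made for the example.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor


def meets_target(header: bytes, nonce: int, prefix: str) -> bool:
    # Toy difficulty rule (assumed): the hex digest must start with `prefix`.
    digest = hashlib.sha256(header + nonce.to_bytes(8, "big")).hexdigest()
    return digest.startswith(prefix)


def search_range(header, start, stop, prefix):
    # Scan one contiguous slice of the nonce space; return the first hit.
    for nonce in range(start, stop):
        if meets_target(header, nonce, prefix):
            return nonce
    return None


def mine(header, prefix="000", workers=4, limit=1 << 20):
    # Give each worker its own non-overlapping nonce range.
    step = limit // workers
    ranges = [
        (w * step, limit if w == workers - 1 else (w + 1) * step)
        for w in range(workers)
    ]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        hits = list(pool.map(
            lambda r: search_range(header, r[0], r[1], prefix), ranges))
    found = [n for n in hits if n is not None]
    # Every worker reports the smallest hit in its range, so the minimum
    # over all workers is the smallest valid nonce below `limit`.
    return min(found) if found else None


nonce = mine(b"example-block-header")
```

A production miner would also signal the remaining workers to stop as soon as one of them succeeds; that cancellation step is left out here to keep the partitioning visible.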

The integration of these techniques demands rigorous profiling to identify bottlenecks at the thread level. Tools like Intel VTune or NVIDIA Nsight facilitate granular measurement of thread execution times and cache misses, providing actionable insights for refinement. Iterative testing combined with parameter tuning enables developers to approach near-optimal concurrency levels tailored for specific blockchain protocols.

This systematic experimentation cultivates a deeper understanding of the interplay between parallel task division and system responsiveness, ultimately guiding innovations that push the boundaries of decentralized ledger performance. By fostering meticulous inquiry into thread behavior under varying computational loads, researchers contribute foundational knowledge driving next-generation blockchain infrastructures.

Data Partitioning in Parallel Blockchains

Efficient data partitioning significantly enhances throughput by distributing the transaction load across multiple processing units within a blockchain network. By segmenting ledger data into distinct shards or partitions, each thread can independently validate and append blocks, minimizing idle time and maximizing resource utilization. This method reduces bottlenecks commonly caused by sequential transaction validation in monolithic ledgers.

Implementing synchronization mechanisms is critical to maintain consistency between partitions during concurrent block generation. Lock-free protocols and consensus algorithms tailored for segmented environments help prevent conflicts such as double-spending and ensure atomicity across threads. The orchestration of these synchronization steps influences overall latency and fault tolerance.

Strategies for Effective Load Distribution

Load balancing among computational units depends on workload characterization, typically involving transaction volume, complexity, and inter-shard communication frequency. Dynamic partition reassignment based on real-time metrics prevents uneven thread saturation that could degrade performance. For example, early Ethereum 2.0 sharding designs proposed crosslinks, in which committees of validators attest to shard state on the beacon chain, so that shards could be synchronized without a central coordinator.

- Static Partitioning: Fixed data ranges assigned at initialization; simpler but less adaptable to fluctuating workloads.

- Dynamic Partitioning: Adjusts shard boundaries or reallocates threads responding to network conditions; requires sophisticated monitoring tools.
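
The two strategies above can be contrasted in a short sketch. Static partitioning is shown as a hash of the account name; the dynamic step is one greedy rebalancing move. The function names, the per-account transaction counts, and the greedy rule are assumptions chosen for illustration.

```python
import hashlib


def shard_for(account: str, num_shards: int) -> int:
    # Static partitioning: a fixed hash decides the shard once and for all.
    digest = hashlib.sha256(account.encode()).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def shard_load(tx_counts, assignment, num_shards):
    # Sum the (assumed) per-account transaction counts on each shard.
    load = [0] * num_shards
    for account, count in tx_counts.items():
        load[assignment[account]] += count
    return load


def rebalance_once(tx_counts, assignment, num_shards):
    # Dynamic partitioning, one greedy step: move a single account from
    # the most loaded shard to the least loaded one if that lowers the peak.
    load = shard_load(tx_counts, assignment, num_shards)
    hot, cold = load.index(max(load)), load.index(min(load))
    candidates = [a for a, s in assignment.items() if s == hot]
    updated = dict(assignment)  # leave the caller's mapping untouched
    if not candidates:
        return updated

    def peak_after_move(account):
        c = tx_counts[account]
        return max(load[hot] - c, load[cold] + c)

    best = min(candidates, key=peak_after_move)
    if peak_after_move(best) < load[hot]:
        updated[best] = cold
    return updated
```

Calling `rebalance_once` repeatedly from a monitoring loop approximates the dynamic strategy; a real system would also have to migrate the moved account's state, which this sketch ignores.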

The design of inter-partition communication channels impacts how quickly the system resolves dependencies arising from transactions spanning multiple shards. Techniques such as asynchronous messaging queues or consensus layer batching reduce synchronization delays while preserving ledger integrity.

A crucial experimental consideration involves measuring how partition granularity influences thread contention and synchronization overhead. Empirical benchmarks demonstrate that overly fine-grained segmentation increases communication costs disproportionately, whereas coarse partitions risk creating hotspots that stall block finalization processes.
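
The trade-off can be made concrete with a deliberately simple cost model. The model is an assumption introduced here for illustration, not a measured result: total work $W$ is split evenly over $p$ partitions, and coordination adds a cost $c$ per partition.

```latex
T(p) = \frac{W}{p} + c\,p
\qquad\Longrightarrow\qquad
\frac{dT}{dp} = -\frac{W}{p^{2}} + c = 0
\qquad\Longrightarrow\qquad
p^{*} = \sqrt{\frac{W}{c}}, \quad T(p^{*}) = 2\sqrt{W c}
```

Below $p^{*}$ the compute term dominates and partitions are too coarse; above it the coordination term dominates and partitions are too fine. Real systems rarely follow such a clean curve, which is why the optimum is usually located by benchmarking.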

An iterative approach to partition refinement encourages continuous performance gains through controlled testing environments where parameters like shard size and thread affinity are systematically varied. By documenting these experiments, developers gain insights into optimal configurations tailored for specific blockchain protocols and deployment scenarios.

Synchronization methods for consensus protocols

Efficient synchronization within consensus mechanisms hinges on minimizing thread contention and balancing load across processing units. Leveraging fine-grained locking or lock-free data structures can significantly reduce waiting times during state transitions, thus optimizing throughput. For instance, adopting Compare-And-Swap (CAS) operations in leader election phases allows threads to update shared states atomically without blocking others, enhancing the scalability of consensus processes under high parallel workloads.
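
The leader-election pattern can be sketched as below. CPython exposes no user-level hardware CAS instruction, so the `AtomicCell` class (a hypothetical name) emulates the semantics with a short internal lock; in a language with native atomics the same logic would map to a real compare-and-swap.

```python
import threading


class AtomicCell:
    """Emulated CAS register; the lock stands in for the atomic instruction."""

    def __init__(self, value=None):
        self._value = value
        self._lock = threading.Lock()

    def compare_and_swap(self, expected, new) -> bool:
        # Write `new` only if the cell still holds `expected`.
        with self._lock:
            if self._value == expected:
                self._value = new
                return True
            return False

    def load(self):
        with self._lock:
            return self._value


def elect_leader(node_ids):
    leader = AtomicCell(None)
    winners = []  # list.append is atomic in CPython

    def campaign(node_id):
        # Only the first CAS from None can succeed; the others simply
        # observe failure and carry on without waiting.
        if leader.compare_and_swap(None, node_id):
            winners.append(node_id)

    threads = [threading.Thread(target=campaign, args=(n,)) for n in node_ids]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return leader.load(), winners
```

Whichever thread wins, exactly one `compare_and_swap` call succeeds, which is the property the election relies on.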

Consensus protocol implementations benefit from orchestrated synchronization that aligns multiple validator nodes’ activities through carefully timed message exchanges and event-driven triggers. Employing barrier synchronization ensures that participating processes reach specific checkpoints before advancing, preventing race conditions during block validation or voting rounds. Byzantine Fault Tolerant (BFT) protocols such as PBFT follow a related pattern: each request passes through pre-prepare, prepare, and commit phases, and a replica advances only after collecting matching messages from a quorum of replicas, which lets the group reach agreement despite malicious actors.
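
A minimal sketch of the checkpoint idea uses `threading.Barrier` from the Python standard library. The validator loop and the vote bookkeeping are hypothetical; the point is only that no thread tallies a round before every vote for that round has been recorded.

```python
import threading


def run_rounds(num_validators=4, num_rounds=3):
    barrier = threading.Barrier(num_validators)
    votes = [[] for _ in range(num_rounds)]
    observed = []  # (round, votes visible) as seen after each barrier
    lock = threading.Lock()

    def validator(vid):
        for rnd in range(num_rounds):
            with lock:
                votes[rnd].append(vid)  # cast this round's vote
            barrier.wait()              # block until every validator voted
            with lock:
                observed.append((rnd, len(votes[rnd])))

    threads = [threading.Thread(target=validator, args=(v,))
               for v in range(num_validators)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return votes, observed
```

A strict barrier waits for every participant, so a single stalled thread blocks the round. Fault-tolerant protocols relax this by advancing once a quorum has responded.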

Exploring thread coordination techniques in consensus

The choice between optimistic and pessimistic synchronization impacts system responsiveness and fault tolerance. Optimistic concurrency control permits multiple threads to proceed with tentative changes, rolling back only upon conflict detection. This method suits blockchain systems with low contention but variable network delays. Conversely, pessimistic approaches enforce exclusive access via mutexes or semaphores, providing stronger consistency guarantees at the cost of potential bottlenecks during peak loads.
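
The optimistic variant can be sketched with a version counter per key: a writer reads a value and its version, computes the update without holding any lock, and commits only if the version is unchanged. The `VersionedStore` class and `deposit` helper are hypothetical names for this example.

```python
import threading


class VersionedStore:
    def __init__(self):
        self._data = {}  # key -> (value, version)
        self._commit_lock = threading.Lock()

    def read(self, key):
        # Tuples are immutable, so a reader always sees a consistent pair.
        return self._data.get(key, (0, 0))

    def try_commit(self, key, new_value, read_version) -> bool:
        # The lock covers only the short validate-and-write step.
        with self._commit_lock:
            _, current = self._data.get(key, (0, 0))
            if current != read_version:
                return False  # another writer committed first
            self._data[key] = (new_value, current + 1)
            return True


def deposit(store, key, amount):
    # Optimistic loop: retry from a fresh read whenever validation fails.
    retries = 0
    while True:
        value, version = store.read(key)
        if store.try_commit(key, value + amount, version):
            return retries
        retries += 1
```

Under low contention almost every commit succeeds on the first attempt. Under heavy contention the retry count grows, which is the point at which a pessimistic lock around the whole read-modify-write tends to become the better choice.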

Experimental designs often incorporate hybrid synchronization schemas combining event-driven callbacks with periodic heartbeat signals to maintain liveness while avoiding deadlocks. For example, Clique, the proof-of-authority protocol used on some Ethereum networks, combines a fixed block period with a rotating in-turn signer, so authorized signers coordinate block production through timing and turn order rather than strict locking. Such approaches illustrate how adaptive synchronization strategies tailored to workload characteristics can improve consensus reliability and performance simultaneously.

Load Balancing in Distributed Ledger Networks

Effective load distribution across nodes in distributed ledger systems directly impacts transaction throughput and network resilience. Employing multiple threads within each node to handle transaction validation allows for simultaneous processing, reducing bottlenecks. A common approach involves partitioning workload based on transaction types or account ranges, enabling each process to run independently and maximize resource utilization without contention.

Dynamic workload allocation strategies leverage real-time metrics such as CPU usage, memory consumption, and network latency to adjust task assignments among nodes. This adaptive redistribution minimizes the risk of overloaded participants while ensuring that idle resources contribute actively. Implementations using shard-based architectures demonstrate significant gains by isolating subsets of the ledger state for parallel handling, thus optimizing the balance between data locality and computational effort.
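
One simple way to express such an assignment is a greedy "least-loaded first" rule backed by a heap. In this sketch the task costs are hypothetical stand-ins for the real-time metrics mentioned above, and `assign_tasks` is an illustrative name.

```python
import heapq


def assign_tasks(task_costs, node_names):
    # Heap of (current load, node name); the lightest node is always on top.
    heap = [(0, name) for name in node_names]
    heapq.heapify(heap)
    placement = {name: [] for name in node_names}
    # Placing the most expensive tasks first tends to balance better.
    for task, cost in sorted(task_costs.items(), key=lambda kv: -kv[1]):
        load, name = heapq.heappop(heap)
        placement[name].append(task)
        heapq.heappush(heap, (load + cost, name))
    loads = {name: load for load, name in heap}
    return placement, loads


placement, loads = assign_tasks(
    {"t1": 8, "t2": 7, "t3": 6, "t4": 5, "t5": 4}, ["a", "b"])
```

The heuristic is not optimal in general, but it is cheap enough to rerun whenever the measured costs change.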

Thread-Level Parallelism and Task Scheduling

At the micro level, individual threads coordinate to execute consensus operations concurrently within a single node. Fine-grained scheduling algorithms prioritize tasks based on urgency and dependencies, which enhances throughput while preserving transactional integrity. For example, Ethereum’s beacon chain assigns validators to committees that attest in parallel within each slot, and their votes are reconciled at epoch boundaries to justify and finalize checkpoints, reducing the idle time associated with strictly sequential processing.

Besides intra-node concurrency, inter-node load balancing requires distributing computation tasks effectively over the entire network topology. Classic Practical Byzantine Fault Tolerance (PBFT) routes every request through a single primary and replaces it only through a view change, so the primary can become a performance bottleneck during consensus rounds. PBFT-style protocols can therefore benefit from rotating or reassigning roles among replicas based on current workload indicators, which helps maintain low latency across geographically dispersed nodes.

- Sharding divides ledger data into manageable partitions processed independently, enabling scalable parallel execution.

- Work stealing techniques allow underutilized nodes to absorb excess transactions from busy peers.

- Load-aware routing protocols redirect requests toward less congested validators or miners to prevent saturation.
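
The work-stealing item above can be sketched inside a single process, with threads standing in for nodes. Each worker takes tasks from the tail of its own deque and, when that is empty, steals from the head of another worker's deque. The function name and the task representation are assumptions; the sketch relies on `collections.deque` supporting thread-safe `pop` and `popleft`.

```python
import threading
from collections import deque


def run_work_stealing(queues):
    # queues: one deque of tasks per worker. Returns the tasks each
    # worker ended up processing.
    done = [[] for _ in queues]  # each worker appends only to its own list

    def steal(me):
        # Try every other worker's queue, taking the oldest task first.
        for other in range(len(queues)):
            if other == me:
                continue
            try:
                return queues[other].popleft()
            except IndexError:
                continue
        return None

    def worker(me):
        while True:
            try:
                task = queues[me].pop()  # own work: newest task first
            except IndexError:
                task = steal(me)
                if task is None:
                    return  # every queue was empty; nothing left to do
            done[me].append(task)

    threads = [threading.Thread(target=worker, args=(i,))
               for i in range(len(queues))]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return done
```

Because tasks here never spawn new tasks, a worker can safely exit once it finds every queue empty. How the work ends up divided depends on thread timing, but each task is processed exactly once.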

Experimental testbeds confirm that incorporating multi-threaded processing inside distributed ledgers reduces confirmation delays by up to 35%, especially when combined with intelligent load redistribution among nodes exhibiting heterogeneous capacities. However, synchronization overhead must be carefully managed; excessive locking can negate benefits gained through concurrent task execution.
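
The limit that synchronization places on such gains is captured by Amdahl's law. With $p$ the fraction of the work that can run in parallel and $n$ the number of threads, the achievable speedup $S(n)$ is:

```latex
S(n) = \frac{1}{(1 - p) + \dfrac{p}{n}},
\qquad
\lim_{n \to \infty} S(n) = \frac{1}{1 - p}

% Worked example with assumed values p = 0.9 and n = 8:
S(8) = \frac{1}{0.1 + 0.9/8} = \frac{1}{0.2125} \approx 4.7
```

In this example, eight threads yield less than a fivefold speedup, and no thread count can exceed tenfold, because the serialized 10% (which includes time spent holding locks) dominates as $n$ grows.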

The ongoing challenge remains balancing the granularity of task division against synchronization costs intrinsic to distributed environments. Future research paths involve refining predictive models that forecast node workloads before assignment and integrating hardware accelerators such as GPUs or FPGAs for specialized cryptographic functions. These innovations promise more efficient exploitation of parallel task execution capabilities inherent in modern decentralized ledger infrastructures.

Parallel transaction validation techniques

Transaction validation in distributed ledger systems can be significantly accelerated by deploying multiple execution threads that operate simultaneously on independent data sets. This approach depends on dividing the workload so that dependency conflicts among operations are minimal. For instance, Solana’s runtime requires each transaction to declare up front the accounts it reads and writes, so transactions that touch disjoint accounts can be scheduled on different cores, reducing bottlenecks and improving throughput.

Effective workload distribution requires careful synchronization mechanisms to prevent race conditions when different threads attempt to access shared resources such as account states or smart contract storage. One practical method is implementing fine-grained locking combined with optimistic concurrency control, allowing several validations to proceed without waiting unnecessarily. Experimental frameworks report speedups of up to 5x on multi-core architectures from such finely tuned task segmentation and conflict resolution, with the actual figure depending on how often transactions conflict.
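
Fine-grained locking can be illustrated with one lock per account instead of one lock for the whole ledger. The `Ledger` class below is a hypothetical sketch. It acquires the two locks involved in a transfer in a fixed global order (sorted by account name), a standard way to rule out deadlock between opposite transfers.

```python
import threading


class Ledger:
    def __init__(self, balances):
        self.balances = dict(balances)
        # One lock per account: transfers on disjoint accounts never wait.
        self.locks = {acct: threading.Lock() for acct in balances}

    def transfer(self, src, dst, amount) -> bool:
        if src == dst:
            return False
        # Always lock in sorted order so that a->b and b->a cannot deadlock.
        first, second = sorted((src, dst))
        with self.locks[first], self.locks[second]:
            if self.balances[src] < amount:
                return False  # insufficient funds; nothing changes
            self.balances[src] -= amount
            self.balances[dst] += amount
            return True
```

Two transfers that share an account still serialize on that account's lock, so hot accounts remain a contention point even with this scheme.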

Technical approaches for concurrent verification

Validation pipelines frequently use directed acyclic graph (DAG) structures to represent dependencies between transactions, enabling processors to identify independent subsets suitable for parallel handling. This avoids serializing operations that do not interact directly. In addition, dynamically sized thread pools adjust the number of active workers based on real-time load metrics, optimizing resource utilization while avoiding the over-subscription common in naïve multithreading models.
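
The dependency analysis can be sketched by giving each transaction explicit read and write sets, treating any read-write or write-write overlap with an earlier transaction as a DAG edge, and grouping transactions by their depth in that graph. The `Tx` type and `schedule` function are hypothetical names for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    name: str
    reads: frozenset
    writes: frozenset


def conflicts(a: Tx, b: Tx) -> bool:
    # Two transactions conflict if either one writes something the
    # other reads or writes.
    return bool(a.writes & (b.reads | b.writes) or b.writes & a.reads)


def schedule(txs):
    # levels[i] is the length of the longest chain of conflicting
    # predecessors of txs[i], i.e. its depth in the dependency DAG.
    levels = []
    for i, tx in enumerate(txs):
        level = 0
        for j in range(i):
            if conflicts(txs[j], tx):
                level = max(level, levels[j] + 1)
        levels.append(level)
    batches = [[] for _ in range(max(levels, default=-1) + 1)]
    for tx, level in zip(txs, levels):
        batches[level].append(tx.name)
    return batches


fs = frozenset
txs = [
    Tx("t1", fs({"A"}), fs({"B"})),
    Tx("t2", fs({"C"}), fs({"D"})),
    Tx("t3", fs({"B"}), fs({"E"})),       # reads what t1 writes
    Tx("t4", fs({"E", "D"}), fs({"F"})),  # reads what t3 and t2 write
    Tx("t5", fs({"A"}), fs({"G"})),       # shares only a read with t1
]
batches = schedule(txs)
```

Transactions in the same batch have no conflicts with one another and can run in parallel, while the batches themselves run in order. The pairwise comparison is quadratic in the number of transactions, so a real pipeline would more likely index transactions by the accounts they touch.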

A notable case study involves Ethereum’s move to a modular client architecture in which transaction execution is separated from consensus: since the Merge, a node runs an execution client alongside a consensus client. Isolating the state transition function in this way makes it easier to experiment with processing disjoint transaction batches concurrently without touching finality logic. Benchmarks are reported to show better scalability for this model than for monolithic designs, particularly under heavy network traffic.

The integration of these methodologies demands precise orchestration within the validation engine’s architecture. Developers must consider both memory bandwidth limitations and instruction-level parallelism when designing processing stages. Experimental results confirm that balancing computational intensity across threads prevents uneven load distribution which otherwise leads to idle cycles and reduced efficiency.

An open research question remains how best to integrate machine learning models into transaction scheduling heuristics to predict conflict likelihood before execution begins. Early prototypes suggest that predictive analytics can enhance workload partitioning by preemptively grouping non-interfering transactions together, thereby maximizing throughput gains while minimizing rollback events caused by detected conflicts during validation phases.

Conclusion: Addressing Scalability in Concurrent Processing Systems

Balancing load distribution while minimizing synchronization overhead remains the most effective strategy to enhance throughput in systems employing multiple execution threads. Careful orchestration of task allocation prevents bottlenecks where idle threads wait excessively, thereby maximizing hardware utilization.

Implementing fine-grained locking mechanisms and lock-free data structures significantly reduces contention, enabling higher degrees of simultaneous execution. Experimentation with adaptive scheduling policies that dynamically assign workloads based on real-time thread availability shows promise for scaling complex workflows without compromising consistency.

Future Directions and Experimental Insights

- Load Balancing Techniques: Investigate heuristics that measure runtime workload variance to redistribute tasks proactively, avoiding thread starvation and hotspots.

- Synchronization Optimizations: Explore hybrid synchronization primitives combining transactional memory concepts with traditional mutexes to lower latency in critical sections.

- Hierarchical Execution Models: Design tiered frameworks where lightweight threads manage micro-tasks within larger process groups, enhancing scalability without exponential complexity growth.

- Resource-Aware Scheduling: Incorporate hardware counters and cache affinity metrics into thread dispatch algorithms to minimize costly context switches and memory stalls.

The interplay between concurrent task management and shared resource coordination demands continuous experimental validation. For example, benchmarking various locking schemes under simulated high-contention scenarios can show lock-free approaches outperforming coarse locks, by up to 40% in throughput in some reported tests, although they require intricate correctness proofs. Such findings encourage iterative cycles of hypothesis testing and refinement, embracing the scientific method as a driver for innovation in multi-threaded execution frameworks.

This investigative path encourages researchers and engineers alike to treat scalability challenges as solvable puzzles rather than insurmountable barriers, with each experiment yielding incremental improvements in how distributed workloads are orchestrated across processing units. By fostering curiosity-driven exploration into load heuristics, synchronization patterns, and dynamic scheduling strategies, the next generation of computational architectures will more effectively harness parallelism’s potential while mitigating its inherent complexities.