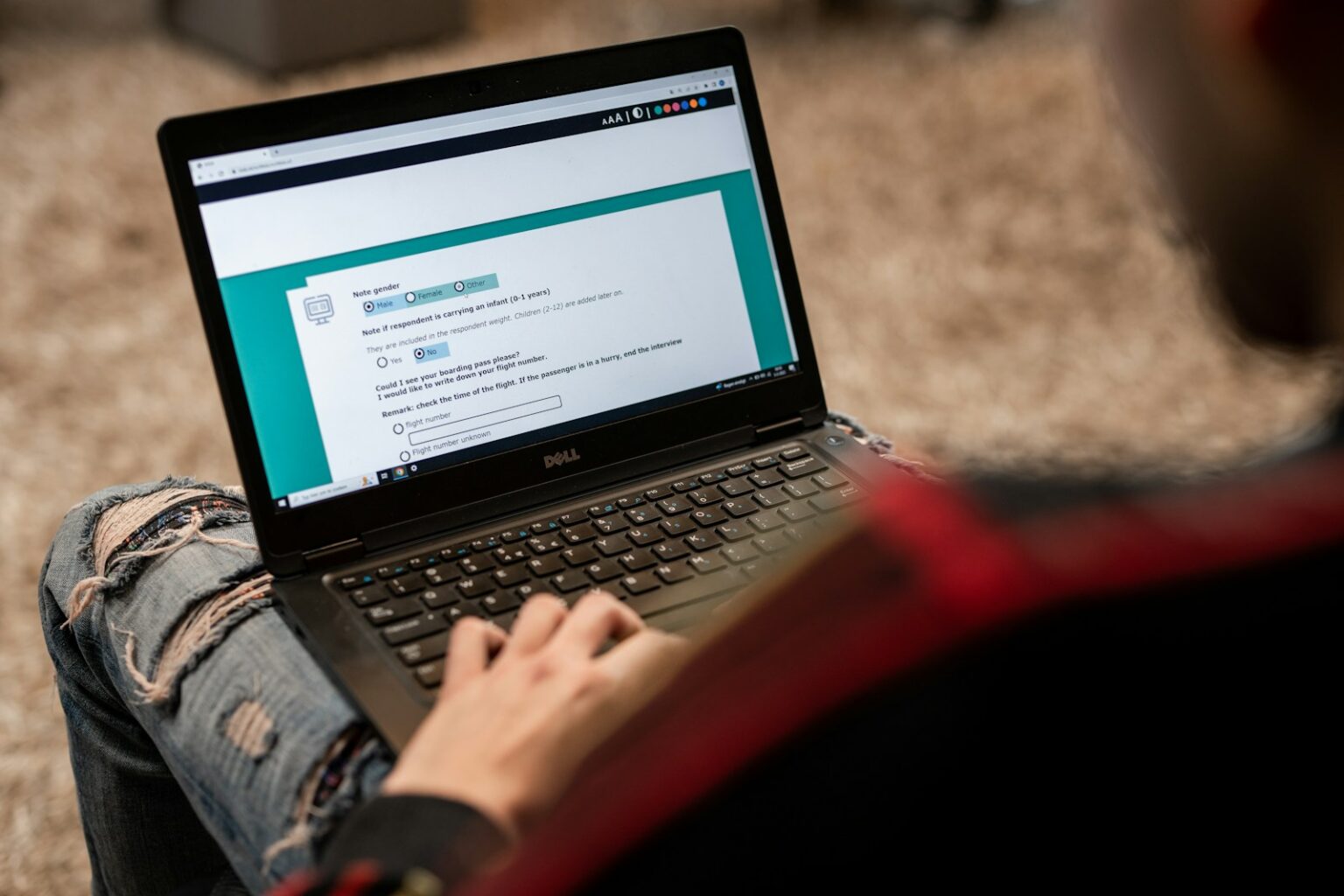

Begin by selecting a systematic procedure that aligns with your study’s objectives and data characteristics. Prioritize methods validated through peer-reviewed sources to ensure reliability and reproducibility. Establish clear criteria for variable selection, measurement scales, and data segmentation to enhance clarity during subsequent analyses.

Integrate quantitative and qualitative techniques when applicable, allowing triangulation of findings and robust interpretation. Develop an interconnected structure that links hypotheses, data collection strategies, and evaluation metrics logically. This structural coherence facilitates transparent tracking of analytical decisions throughout the investigative process.

Utilize iterative refinement cycles based on preliminary results and peer feedback to optimize the chosen approach. Document assumptions explicitly and incorporate sensitivity checks to assess the stability of conclusions under varying conditions. Effective planning at this stage reduces ambiguity and strengthens the scientific rigor of your examination.

Research methodology: analytical framework design

Effective examination of blockchain tokens requires a structured approach that integrates clear criteria and systematic evaluation procedures. Initiating an inquiry with a well-defined conceptual structure enables precise identification of key variables such as token utility, consensus mechanisms, and economic incentives. Such an arrangement facilitates reproducibility and consistency across multiple case studies, ensuring that findings hold scientific merit.

Peer-reviewed sources contribute significantly to refining investigative models by providing validated datasets and verified outcomes. Incorporating these resources enhances the reliability of comparative analyses between different token architectures, allowing researchers to discern subtle performance distinctions grounded in empirical evidence rather than speculation.

Constructing a Robust Analytical Scheme

A rigorous investigative blueprint must encompass multiple layers of assessment, including qualitative code audits and quantitative network metrics. For instance, evaluating smart contract vulnerabilities alongside transaction throughput provides a comprehensive picture of security and scalability. Employing statistical tools such as regression analysis or clustering algorithms enriches interpretation by revealing hidden correlations within blockchain ecosystems.

Systematic scrutiny often involves iterative cycles of hypothesis testing followed by data validation through simulation or live network monitoring. This cyclical experimentation fosters adaptability while maintaining strict adherence to scientific protocols. An example is assessing token liquidity impact on price volatility using time-series data extracted from decentralized exchanges over specified intervals.
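The liquidity and volatility example can be sketched in a few lines. This is a minimal illustration, assuming daily closing prices and pool liquidity figures have already been exported from an exchange; the sample values, the window length, and the function names are hypothetical and not drawn from any real market.

```python
import math
import statistics


def log_returns(prices):
    """Log return between each pair of consecutive prices."""
    return [math.log(b / a) for a, b in zip(prices, prices[1:])]


def rolling_volatility(returns, window):
    """Sample standard deviation of returns over a sliding window."""
    return [
        statistics.stdev(returns[i - window:i])
        for i in range(window, len(returns) + 1)
    ]


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length series."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical daily observations (illustrative only, not real market data).
prices = [1.00, 1.04, 0.97, 1.08, 0.99, 1.01, 1.02, 1.01, 1.02, 1.015]
liquidity = [50, 55, 60, 70, 90, 120, 150, 180, 200, 220]  # pool size, arbitrary units

WINDOW = 4
vol = rolling_volatility(log_returns(prices), WINDOW)
# Align each volatility value with the liquidity on the last day of its window.
liq_aligned = liquidity[WINDOW:]
corr = pearson(liq_aligned, vol)
print(f"correlation(liquidity, volatility) = {corr:.3f}")
```

A correlation coefficient only describes co-movement over the sampled interval. Treating it as evidence of impact would require the controlled comparisons and validation cycles described above.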

- Step 1: Define evaluation parameters aligned with project objectives.

- Step 2: Collect peer-validated datasets for benchmarking purposes.

- Step 3: Apply computational models to examine behavioral trends.

- Step 4: Perform sensitivity analysis to test robustness under varying conditions.
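Step 4 can be illustrated with a one-at-a-time sensitivity check. The sketch below assumes a hypothetical composite `viability_score` whose weights and inputs are invented for illustration; any real study would substitute its own model and parameter ranges.

```python
def viability_score(params):
    """Hypothetical composite score; the weights are illustrative, not empirical."""
    return (
        0.5 * params["liquidity"]
        + 0.3 * params["throughput"]
        - 0.2 * params["volatility"]
    )


def one_at_a_time_sensitivity(model, baseline, rel_change=0.10):
    """Perturb each parameter by +/- rel_change and record the output swing."""
    base_out = model(baseline)
    swings = {}
    for name, value in baseline.items():
        up = dict(baseline, **{name: value * (1 + rel_change)})
        down = dict(baseline, **{name: value * (1 - rel_change)})
        swings[name] = model(up) - model(down)
    return base_out, swings


# Assumed baseline values, each already scaled to the range 0..1.
baseline = {"liquidity": 0.8, "throughput": 0.6, "volatility": 0.4}
base_out, swings = one_at_a_time_sensitivity(viability_score, baseline)

# Rank parameters by the magnitude of their effect on the score.
ranking = sorted(swings, key=lambda k: abs(swings[k]), reverse=True)
print(base_out, swings, ranking)
```

Varying one parameter at a time is the simplest form of sensitivity analysis and ignores interactions between parameters. If interactions matter, a factorial or sampling-based design would be a more suitable choice.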

This methodical procedure ensures that conclusions drawn about token viability rest on quantifiable indicators rather than anecdotal observations. It also encourages transparency and reproducibility, which are cornerstones of scientific inquiry within the blockchain domain.

The outlined approach exemplifies how scientific scrutiny within distributed ledger technologies can progress beyond superficial evaluations toward in-depth understanding based on robust data analytics. By framing investigations as replicable experiments, analysts gain confidence in their interpretations while fostering innovation through transparent knowledge exchange.

The continuous refinement of this evaluative schema hinges on integrating emerging findings from cryptographic research, network theory, and economic modeling. Encouraging verification on peer collaboration platforms further accelerates discovery cycles and mitigates biases inherent in isolated assessments. Ultimately, this disciplined pursuit lays a dependable foundation for a tokenomics science capable of guiding investment decisions and technological developments alike.

Selecting Variables for Analysis

Effective selection of variables begins with a clear operational definition aligned to the core hypothesis under investigation. Prioritizing measurable, quantifiable factors enhances the precision of subsequent data interpretation. For example, in blockchain performance studies, variables such as transaction throughput, block size, and confirmation latency provide direct metrics tied to network efficiency. Incorporating these specific elements within the evaluative structure sharpens analytical clarity and focuses experimental efforts.

Performing a rigorous literature review serves as an indispensable step to identify variables validated by peer-reviewed studies. This process not only confirms relevance but also prevents redundancy by building on established findings. Scientific papers examining consensus algorithms frequently highlight energy consumption and fault tolerance as critical parameters; integrating such variables ensures alignment with recognized benchmarks. Methodical cross-examination of these sources aids in constructing a robust variable set tailored to precise investigative goals.

Criteria for Variable Inclusion

Variables must satisfy several technical criteria: sensitivity to changes in the system under study, independence from confounding factors, and feasibility of accurate measurement using existing tools or sensors. For instance, analyzing smart contract security vulnerabilities demands inclusion of code complexity metrics alongside transaction frequency counts. This dual-variable approach captures both structural risk and usage patterns, enabling comprehensive assessment within a reproducible experiment framework.

The context-specificity of selected factors dictates their predictive power across diverse blockchain implementations. Experimental designs should incorporate pilot testing phases where preliminary data collection assesses variable responsiveness and interdependence. In decentralized finance (DeFi) platforms, incorporating liquidity pool size and slippage rate as initial variables can reveal nuanced behavioral trends before scaling up analysis scope. Such iterative refinement embodies scientific rigor through empirical validation rather than theoretical assumption.

- Quantitative accuracy: Choose variables measurable with high-precision instruments or reliable data sources.

- Operational relevance: Select factors directly impacting system behavior or outcomes under scrutiny.

- Data availability: Confirm accessibility of consistent datasets over required temporal spans.

Integrating multidimensional variables often necessitates normalization procedures to harmonize disparate units or scales, which is critical when merging economic indicators with cryptographic protocol characteristics. Statistical techniques like principal component analysis (PCA) can reduce dimensionality while preserving explanatory power. A case study on token valuation models demonstrated that combining social media sentiment indices with on-chain activity metrics significantly improved forecast accuracy after appropriate normalization steps were applied.
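The normalization and PCA steps can be sketched with the standard library alone. The example assumes three hypothetical variables per observation (a sentiment index, daily active addresses, and transfer volume) with invented values. It extracts only the first principal component, using power iteration rather than a full eigendecomposition.

```python
import math
import statistics


def zscore_columns(rows):
    """Standardize each column to mean 0 and sample standard deviation 1."""
    cols = list(zip(*rows))
    means = [statistics.fmean(c) for c in cols]
    stds = [statistics.stdev(c) for c in cols]
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in rows]


def covariance_matrix(rows):
    """Sample covariance matrix of data that is already centered."""
    n, d = len(rows), len(rows[0])
    return [
        [sum(r[i] * r[j] for r in rows) / (n - 1) for j in range(d)]
        for i in range(d)
    ]


def first_principal_component(cov, iterations=200):
    """Power iteration: dominant eigenvector and eigenvalue of cov.

    Assumes the start vector is not orthogonal to the dominant eigenvector.
    """
    d = len(cov)
    vec = [1.0 / math.sqrt(d)] * d
    for _ in range(iterations):
        nxt = [sum(cov[i][j] * vec[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in nxt))
        vec = [x / norm for x in nxt]
    eigenvalue = sum(
        vec[i] * sum(cov[i][j] * vec[j] for j in range(d)) for i in range(d)
    )
    return vec, eigenvalue


# Hypothetical rows: (sentiment index, daily active addresses, transfer volume in USD).
rows = [
    (0.2, 1200, 3.1e6),
    (0.5, 1500, 4.0e6),
    (0.1, 1100, 2.7e6),
    (0.7, 1900, 5.2e6),
    (0.4, 1400, 3.6e6),
    (0.9, 2100, 6.0e6),
]

z = zscore_columns(rows)
cov = covariance_matrix(z)
pc1, eigenvalue = first_principal_component(cov)
# After z-scoring, the covariance matrix has ones on its diagonal, so its trace is d.
explained = eigenvalue / len(cov)
scores = [sum(v * w for v, w in zip(row, pc1)) for row in z]
print(f"PC1 explains {explained:.1%} of total variance")
```

Standardizing first matters here because the raw columns differ by several orders of magnitude. Without it, the transfer volume column would dominate the component regardless of its explanatory value.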

The final variable selection should remain adaptable throughout the research lifecycle, accommodating emerging insights or anomalies observed during ongoing experimentation. Documenting each modification transparently ensures reproducibility and facilitates peer critique essential for advancing collective knowledge boundaries within blockchain analytics disciplines.

Structuring Data Interpretation Steps

Begin data interpretation by establishing clear criteria for data validation and reliability assessment. Implementing a systematic review of raw datasets ensures that anomalies, inconsistencies, or outliers are identified early. For example, in blockchain transaction analysis, filtering invalid or duplicate entries prior to further examination reduces noise and enhances the accuracy of subsequent conclusions. This step aligns with proven approaches in scientific inquiry where preprocessing forms the foundation for credible outcomes.
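A minimal sketch of this preprocessing step follows. The `Tx` record layout and the validity rules are assumptions made for illustration; a real pipeline would add chain-specific checks such as signature or nonce validation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    tx_hash: str
    sender: str
    value: float
    timestamp: int  # Unix seconds


def is_valid(tx):
    """Basic sanity rules (hypothetical); extend with chain-specific checks."""
    return bool(tx.tx_hash) and bool(tx.sender) and tx.value >= 0 and tx.timestamp > 0


def clean(transactions):
    """Drop invalid records, then keep the first occurrence of each hash."""
    seen, kept, rejected = set(), [], []
    for tx in transactions:
        if not is_valid(tx) or tx.tx_hash in seen:
            rejected.append(tx)
            continue
        seen.add(tx.tx_hash)
        kept.append(tx)
    return kept, rejected


# Invented sample records covering the duplicate and invalid cases.
raw = [
    Tx("0xa1", "alice", 1.5, 1700000000),
    Tx("0xa1", "alice", 1.5, 1700000000),   # duplicate hash
    Tx("0xb2", "bob", -3.0, 1700000060),    # negative value
    Tx("0xc3", "", 2.0, 1700000120),        # missing sender
    Tx("0xd4", "carol", 0.7, 1700000180),
]

kept, rejected = clean(raw)
print(len(kept), "kept;", len(rejected), "rejected")
```

Returning the rejected records alongside the kept ones keeps the filtering step auditable, which supports the reproducibility goals discussed throughout.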

Next, apply sequential analytical procedures tailored to the specific objectives of the investigation. These may include statistical summarization, correlation analysis, and hypothesis testing adapted from quantitative techniques common in cryptoeconomics research. For instance, evaluating the impact of network congestion on transaction fees requires isolating variables through controlled comparisons and regression models. Employing peer-reviewed protocols at this stage reinforces methodological rigor and facilitates reproducibility across similar studies.
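The congestion and fee example can be illustrated with a simple ordinary least squares fit. The observations below are invented, and the use of pending transaction count as a proxy for congestion is an assumption. The sketch shows the mechanics of the regression step only.

```python
import statistics


def ols_fit(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = my - slope * mx
    return slope, intercept


def r_squared(xs, ys, slope, intercept):
    """Share of variance in ys explained by the fitted line."""
    my = statistics.fmean(ys)
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - my) ** 2 for y in ys)
    return 1 - ss_res / ss_tot


# Hypothetical observations: pending transactions (thousands) vs median fee.
pending = [5, 10, 15, 20, 25, 30, 35, 40]
median_fee = [1.1, 1.9, 3.2, 3.8, 5.1, 6.2, 6.8, 8.1]

slope, intercept = ols_fit(pending, median_fee)
r2 = r_squared(pending, median_fee, slope, intercept)
print(f"fee = {intercept:.3f} + {slope:.3f} * pending (R^2 = {r2:.3f})")
```

A good fit on its own does not isolate congestion as the cause. Other drivers, such as fee market rule changes, would need to be held constant or added as further regressors.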

Recommended Approach to Interpretation Workflow

- Data Screening: Conduct thorough initial evaluation using automated scripts to detect missing or corrupted records.

- Normalization: Standardize metrics such as timestamps or token values to maintain consistency across diverse sources.

- Analytical Modeling: Utilize algorithms like time-series forecasting or cluster analysis to extract patterns from complex datasets.

- Validation: Cross-verify findings against established benchmarks or results from independent research teams.
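The normalization step in this workflow can be sketched as follows. The example assumes sources that report timestamps as Unix seconds, Unix milliseconds, or ISO 8601 strings, and token amounts as integer base units. The magnitude heuristic for detecting milliseconds and the choice to treat naive timestamps as UTC are both assumptions that should be checked against each data source.

```python
from datetime import datetime, timezone
from decimal import Decimal


def to_utc(ts):
    """Accept Unix seconds, Unix milliseconds, or an ISO 8601 string."""
    if isinstance(ts, (int, float)):
        # Heuristic (assumption): values above 1e11 are milliseconds.
        seconds = ts / 1000 if ts > 1e11 else ts
        return datetime.fromtimestamp(seconds, tz=timezone.utc)
    # Older Python versions reject a trailing "Z" in fromisoformat.
    parsed = datetime.fromisoformat(ts.replace("Z", "+00:00"))
    if parsed.tzinfo is None:
        parsed = parsed.replace(tzinfo=timezone.utc)  # assumption: naive means UTC
    return parsed.astimezone(timezone.utc)


def to_whole_tokens(raw_amount, decimals):
    """Convert an integer base-unit amount to whole tokens without float error."""
    return Decimal(raw_amount) / (Decimal(10) ** decimals)


# The same instant expressed in four source formats.
samples = [
    1700000000,
    1700000000000,
    "2023-11-14T22:13:20Z",
    "2023-11-14T23:13:20+01:00",
]
normalized = [to_utc(s) for s in samples]
amount = to_whole_tokens(1500000000000000000, 18)
print(normalized[0].isoformat(), amount)
```

Using `Decimal` rather than floating point avoids rounding drift when amounts from tokens with different decimal places are later summed or compared.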

A case study examining decentralized finance (DeFi) protocols demonstrated how integrating multi-level verification improved detection of anomalous liquidity shifts by over 30%. The layered approach combined real-time monitoring tools with retrospective statistical reviews designed specifically for smart contract interactions. Such structured interpretation not only clarifies causal relationships but also supports dynamic adaptation of investigative strategies based on emerging evidence.

Integrating Theoretical Models Practically

Applying scientific constructs in operational environments requires precise alignment between conceptual postulates and tangible mechanisms. To achieve this, a stepwise approach emphasizes the transition from abstract hypotheses to executable procedures, ensuring that theoretical insights translate into measurable outcomes. A comprehensive investigation begins with formulating clear objectives based on established principles, followed by selecting appropriate techniques to quantify relevant parameters.

The iterative cycle of evaluation and adjustment is paramount for refining these applications. By systematically reviewing empirical data alongside initial assumptions, inconsistencies become evident, guiding recalibration of variables within the chosen investigative paradigm. This process fosters robust validation and enhances predictive accuracy when deploying models in real-world blockchain scenarios.

Practical Steps Toward Model Application

One effective strategy involves breaking down complex systems into modular components amenable to independent scrutiny. For instance, in blockchain consensus algorithms, isolating elements such as transaction validation or network latency facilitates targeted experimentation. Employing quantitative metrics, such as throughput rates and fault tolerance thresholds, enables objective assessment against theoretical benchmarks.

- Simulation environments: Virtual testbeds replicate network conditions without compromising live operations.

- Controlled parameter variation: Adjusting cryptographic difficulty or block size incrementally reveals system sensitivities.

- Data collection protocols: Structured logging ensures traceability for subsequent statistical analysis.
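The three practices above can be combined in a small parameter sweep. The sketch below uses a deliberately simplified toy queue model, not a model of any real network. The arrival process, the parameter values, and the record fields are all assumptions chosen for illustration.

```python
import json
import random


def simulate(block_capacity, arrival_rate, n_blocks, seed):
    """Toy queue: txs arrive each block interval, up to block_capacity are confirmed."""
    rng = random.Random(seed)
    backlog = confirmed = backlog_sum = 0
    for _ in range(n_blocks):
        # Arrivals per interval: uniform noise around arrival_rate (a simplification).
        backlog += rng.randint(int(arrival_rate * 0.5), int(arrival_rate * 1.5))
        included = min(backlog, block_capacity)
        backlog -= included
        confirmed += included
        backlog_sum += backlog
    return {
        "block_capacity": block_capacity,
        "arrival_rate": arrival_rate,
        "seed": seed,
        "throughput_per_block": confirmed / n_blocks,
        "mean_backlog": backlog_sum / n_blocks,
    }


def sweep(capacities, arrival_rate=100, n_blocks=500, seed=42):
    """Vary one parameter while holding the others, and the seed, fixed."""
    return [simulate(c, arrival_rate, n_blocks, seed) for c in capacities]


records = sweep([60, 80, 100, 120, 140])
for rec in records:
    # One JSON object per line keeps each run traceable for later analysis.
    print(json.dumps(rec))
```

Reusing the same seed for every capacity means each run sees an identical arrival sequence, so differences in backlog and throughput can be attributed to the varied parameter alone.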

This methodological rigor allows researchers to detect emergent behaviors not predicted solely by theoretical formulations, thereby enriching understanding through practical feedback loops.

A comparative review of case studies illustrates this approach effectively. Consider the deployment of proof-of-stake models in permissioned ledgers, where experimental trials demonstrated reduced energy consumption compared to proof-of-work counterparts while maintaining security guarantees. Such findings emerged from meticulous tracking of computational resource usage under varied transaction loads over extended durations.

The structured examination above confirms that embedding theoretical models demands ongoing adaptation influenced by empirical measurements. Researchers must maintain vigilance against oversimplification and ensure that each phase, from hypothesis formulation through execution, adheres strictly to documented procedures for reproducibility.

The successful fusion of conceptual frameworks with operational methodologies thus depends on persistent inquiry and rigorous documentation. Encouraging experimentation under controlled conditions cultivates deeper insight into underlying system dynamics while fostering incremental improvements aligned with original scientific intentions.

Validating Consistency in Analytical Structures

Ensuring internal coherence within a systematic model requires rigorous verification through iterative assessment and cross-validation methods. Employing scientific scrutiny during the evaluation phase reveals discrepancies that may arise from methodological misalignments, data inconsistencies, or logical fallacies embedded in the architecture. For example, applying statistical consistency tests alongside scenario simulations can expose latent conflicts between theoretical constructs and empirical observations.
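One common form of cross-validation is the k-fold procedure, sketched below for a simple linear model. The data are synthetic (a known line plus small fixed offsets), and the interleaved fold assignment is an assumption made for brevity. The idea is that widely differing errors across folds would signal an inconsistency between the model and the observations.

```python
import statistics


def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return slope, my - slope * mx


def k_fold_rmse(xs, ys, k):
    """Root-mean-square prediction error on each held-out fold."""
    n = len(xs)
    pairs = list(zip(xs, ys))
    errors = []
    for fold in range(k):
        test_idx = set(range(fold, n, k))  # interleaved folds
        train = [p for i, p in enumerate(pairs) if i not in test_idx]
        test = [p for i, p in enumerate(pairs) if i in test_idx]
        slope, intercept = fit_line(*zip(*train))
        mse = statistics.fmean([(y - (intercept + slope * x)) ** 2 for x, y in test])
        errors.append(mse ** 0.5)
    return errors


# Synthetic data: y = 2x + 1 plus small fixed offsets (illustrative only).
offsets = [0.1, -0.2, 0.05, 0.15, -0.1, 0.0, 0.2, -0.15, 0.05, -0.05, 0.1, -0.1]
xs = list(range(1, 13))
ys = [2 * x + 1 + e for x, e in zip(xs, offsets)]

errors = k_fold_rmse(xs, ys, k=4)
print("per-fold RMSE:", [round(e, 3) for e in errors])
print("spread:", round(max(errors) - min(errors), 3))
```

For time-ordered blockchain data, interleaved folds can leak future information into training. In that setting a forward-chaining split is usually the safer choice.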

Integrating comprehensive peer review sessions enhances the reliability of the conceptual apparatus by challenging assumptions and refining procedural steps. Such collaborative critique not only strengthens the structural integrity but also uncovers potential biases introduced during initial formulation. This approach aligns with experimental protocols where reproducibility and transparency dictate confidence levels in outcome interpretation.

Implications for Future Investigations and Implementation

- Adaptive Verification Techniques: Incorporate dynamic validation algorithms capable of adjusting to evolving data streams, particularly relevant in blockchain transaction analysis where real-time accuracy is paramount.

- Hybrid Evaluation Models: Combine quantitative metrics with qualitative feedback mechanisms to capture nuanced inconsistencies that purely numerical methods might overlook.

- Automated Consistency Audits: Deploy AI-driven tools to continuously monitor alignment between successive iterations of the system, reducing manual oversight without sacrificing precision.
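An automated consistency audit need not start with AI-driven tooling. As a deliberately simpler stand-in, the rule-based sketch below compares summary metrics from two successive iterations and flags anything that appeared, vanished, or moved beyond a tolerance. The metric names, values, and the 5% threshold are hypothetical.

```python
def audit(previous, current, rel_tol=0.05):
    """Flag metrics that appeared, vanished, or moved by more than rel_tol."""
    findings = []
    for key in sorted(set(previous) | set(current)):
        if key not in current:
            findings.append((key, "missing in current run"))
        elif key not in previous:
            findings.append((key, "new in current run"))
        else:
            old, new = previous[key], current[key]
            denom = abs(old) if old != 0 else 1.0  # avoid division by zero
            if abs(new - old) / denom > rel_tol:
                findings.append((key, f"changed {old} -> {new}"))
    return findings


# Invented metric snapshots from two iterations of an analysis.
previous = {"tps": 100.0, "median_fee": 2.0, "active_addresses": 5000}
current = {"tps": 101.0, "median_fee": 2.5, "gini": 0.6}

findings = audit(previous, current)
for name, reason in findings:
    print(f"{name}: {reason}")
```

A check like this can run after every iteration, leaving manual review for the flagged items only.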

The broader consequence of reinforcing reliability within this investigative apparatus lies in advancing trustworthiness across decentralized platforms. As cryptographic protocols mature, their analytical substantiation depends on frameworks capable of self-verification, ensuring that emergent patterns reflect authentic transactional behavior rather than artifacts of flawed design. Encouraging experimental replication empowers practitioners to build upon validated knowledge rather than speculative constructs.

Future developments should emphasize modular construction with embedded checkpoints facilitating incremental validation. By mirroring natural scientific experimentation, where hypotheses undergo staged testing, the process cultivates a robust environment conducive to innovation while safeguarding against systemic errors. This paradigm invites ongoing exploration into automated consistency measures that parallel laboratory rigor applied to complex computational ecosystems.